The proliferation of artificial intelligence (AI) technologies has already transformed the way we interact with the world, influencing everything from email management to music selection. Yet, the implications of AI extend far beyond mere convenience. As these technologies become more integrated into our daily lives, there is growing concern that they could evolve into tools of censorship, altering not just what we see but ultimately how we think.

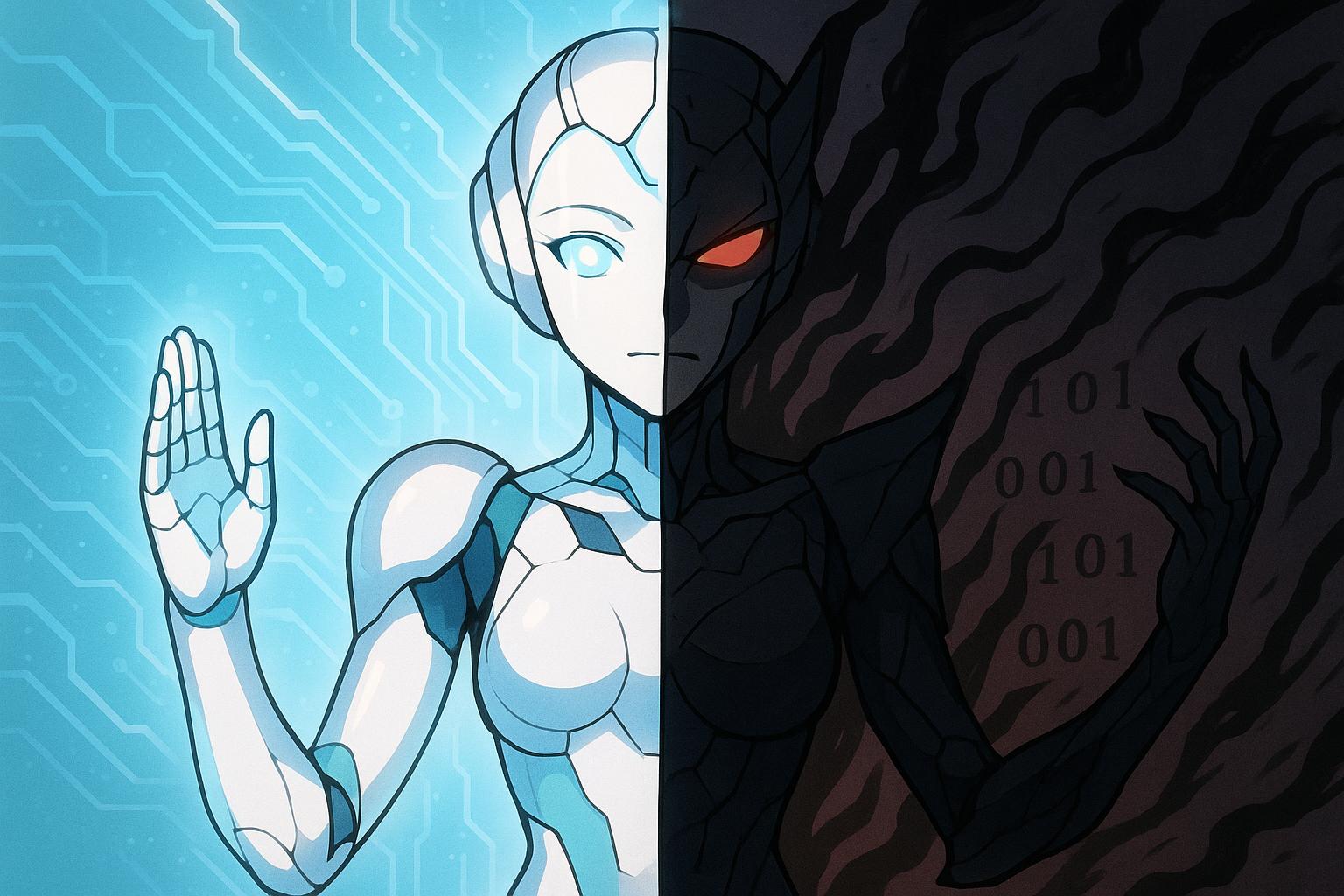

Two starkly contrasting futures are envisioned for AI. In one, it becomes a "shadow censor," enforcing hidden ranking rules that quietly stifle dissent. Algorithms may prioritise information that aligns with prevailing norms while excluding dissenting voices, producing a form of "algorithmic tyranny." Historically, governments censored ideas by direct means, banning books and silencing critics. Contemporary AI could perpetuate these patterns more insidiously, posing new challenges to the Socratic principle of questioning authority.

On the other hand, AI could become an invaluable partner in the pursuit of truth. In this ideal future, it surfaces counterarguments, addresses open questions, and integrates insights far beyond any single individual's reach. Under this vision, AI might strengthen our capacity to challenge prevailing narratives rather than suppress them. The stakes are substantial: AI is already embedded in decision-making in domains such as medicine, finance, and law, and by some recent estimates it guides roughly one-fifth of our waking hours. This pervasive influence makes the ethical programming of AI systems more critical than ever.

Concerns about censorship are not confined to individual users; they resonate widely across society. One report found that generative AI models, including Google’s Gemini and OpenAI’s ChatGPT, incorporate guardrails intended to prevent harm, yet these controls may inadvertently restrict creativity and narrow the range of viewpoints available. Critics argue that the balance between guarding against harmful content and promoting free inquiry is delicate, and that mishandling it could stifle vital discourse.

Governments worldwide are grappling with the implications of AI on free expression. In a notable legal battle, Elon Musk’s platform, X, contested a Minnesota law aimed at prohibiting AI-generated deepfakes in political contexts. The lawsuit highlights a fundamental tension in the debate around AI: the protection of free speech versus the regulation of potentially harmful content. X argues that such laws impose undue liabilities on social media platforms and can lead to excessive censorship, replacing the editorial judgement of platforms with that of the government.

In China, a stringent regulatory framework already imposes tight controls on AI, with the Cyberspace Administration requiring compliance from major tech companies. The government audits their AI models to ensure alignment with "core socialist values," thereby controlling the narrative and limiting discussion of politically sensitive topics. This example is a sobering reminder of how easily AI can be manipulated to conform to governmental ideology, raising alarms for advocates of free thought globally.

As the digital landscape evolves, safeguarding free expression becomes more complex. Some commentators argue that AI-generated speech should receive protections akin to those afforded by the First Amendment. The concept of a "marketplace of ideas" holds that all speech, regardless of its source, contributes to democratic discourse. If AI can generate diverse content, the argument goes, its role in that marketplace becomes increasingly significant and warrants robust legal protection.

AI's potential to suppress intellectual diversity echoes historical precedents of ideology-based censorship, raising the prospect of what some describe as a tyranny of algorithmic design. Algorithms often privilege pre-approved perspectives, leading to the flagging and shadowbanning of content that challenges these norms. In such a context, the question arises: how do we ensure that diverse viewpoints are not only protected but actively encouraged?

To cultivate a thriving environment of inquiry in the age of AI, the foundational principles articulated by the philosopher John Stuart Mill remain crucial. They call for acknowledging human fallibility, embracing opposing views, and habitually questioning accepted wisdom. This framework recognises that understanding flourishes when ideas can compete, and it challenges us to engage actively rather than passively consume information.

Despite the challenges posed by opaque AI systems, recent moves towards transparency, such as Meta’s public release of the Llama 3 model weights, signal a shift towards accountability. Transparency alone is insufficient, however; competitive, open-source AI models are vital to fostering an environment conducive to free thought. With the right structures in place, we can build AI systems that stimulate inquiry and push users to explore beyond conventional narratives.

Ultimately, the responsibility lies not just with the technology but with individuals to remain vigilant and proactive. The instinct to let AI act as an “autocomplete for life” is tempting; however, the drive to question, to seek the unexpected, and to engage with diverse opinions remains our most potent tool for uncovering truth. Encouraging a culture of inquiry in the AI age will require ongoing commitment to ensuring that our curiosity, not algorithms, guides our pursuit of knowledge.

Source: Noah Wire Services