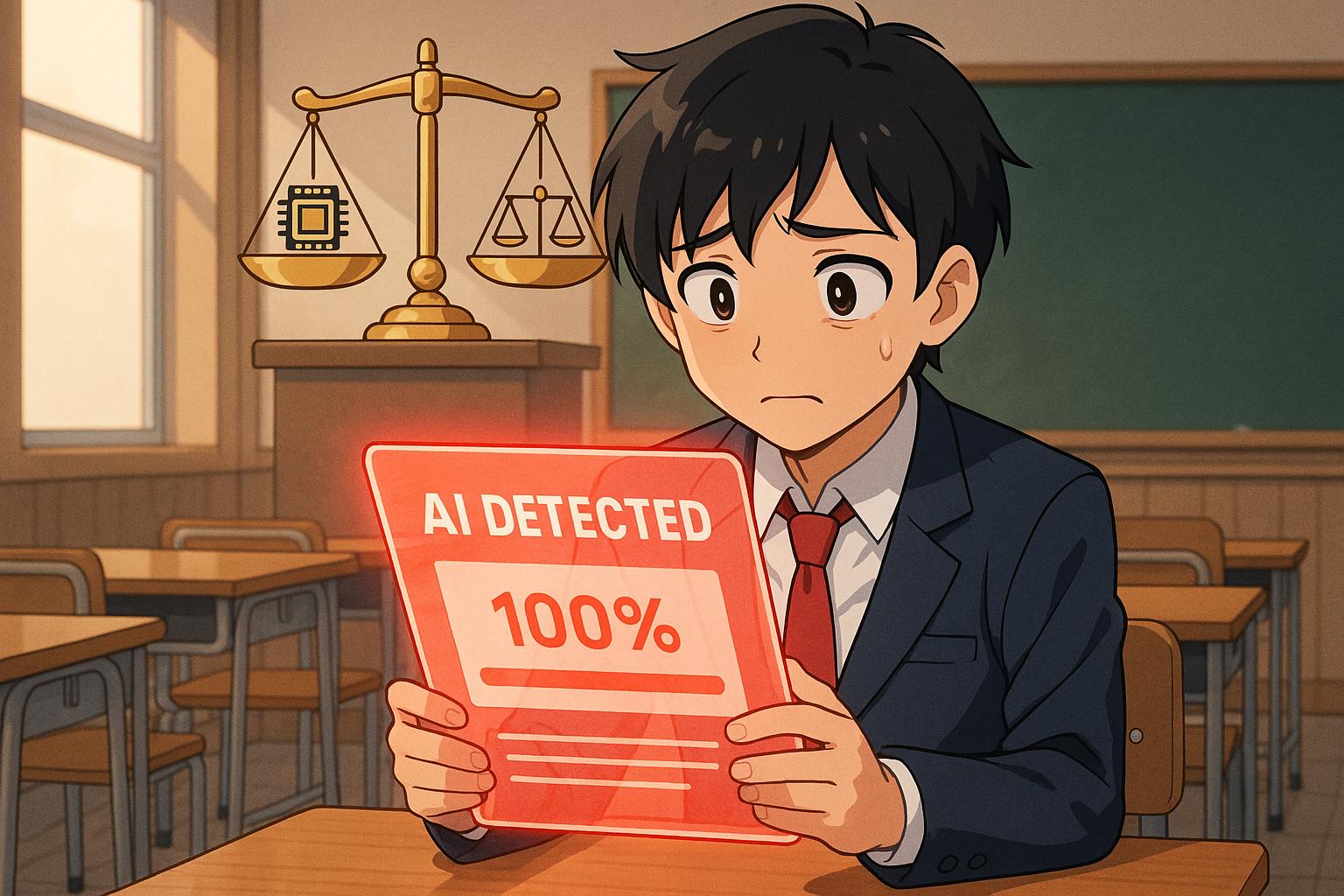

The increasing reliance on AI writing detection tools in educational settings has sparked significant controversy, highlighting the precarious balance between innovation and fairness. Turnitin, a widely used academic integrity service, has recently acknowledged a troubling aspect of its AI detection capabilities. By its own assessment, the software mistakenly flags individual sentences of human-written text as AI-generated approximately 4% of the time, a statistic that has alarmed students and educators alike.

This false positive rate is particularly concerning given the rise of AI-assisted learning, as many students are unaware that their submissions may be scrutinised in this way. Turnitin's Chief Product Officer, Annie Chechitelli, explained that the document-level false positive rate is below 1% for texts in which 20% or more of the writing is detected as AI-generated, whereas the sentence-level false positive rate is around 4%. The problem is most pronounced in mixed documents, where human-written and AI-generated passages sit side by side, making accurate detection and assessment considerably harder.

The consequences for students can be severe. A recent petition started by Kelsey Auman at the University at Buffalo illustrates the anxiety surrounding AI detection tools. Auman, who was close to completing her master’s programme, described how her assignments were flagged by Turnitin, putting her graduation, and that of some of her classmates, at risk of delay. As she put it, “It never occurred to me that I should keep evidence to prove my honesty when submitting my work.” The petition, which has gathered more than 1,000 signatures, reflects a broader movement among students calling for AI detection technologies to be suspended for fear of erroneous accusations.

A further concern is that these tools may unfairly target non-native English speakers. Research indicates that AI detectors are particularly prone to flagging their writing: one notable study found that essays written by non-native speakers were labelled as AI-generated 61% of the time on average. This disparity raises serious questions about whether such tools can be applied equitably across diverse student populations.

In response to mounting scrutiny, some institutions have disabled Turnitin's AI detection feature entirely. Vanderbilt University, for example, stopped using it after concluding that the risk of false positives and the lack of transparency about how the algorithm works outweighed its potential benefits. The decision signals a significant shift in academic integrity practice, with the reliability of AI-based evaluation now under intense review.

The question remains whether educational institutions can uphold academic integrity while managing the risks posed by increasingly complex AI detection systems. As these technologies evolve, educators will need to remain vigilant and foster open dialogue with students. Chechitelli herself advises educators to treat AI detection scores as a starting point for meaningful discussion rather than as definitive proof of academic misconduct.

As education adapts to technological advances, the interplay between AI writing tools and academic integrity will require ongoing reflection and adjustment to keep fairness and justice at the forefront of educational practice.

Source: Noah Wire Services