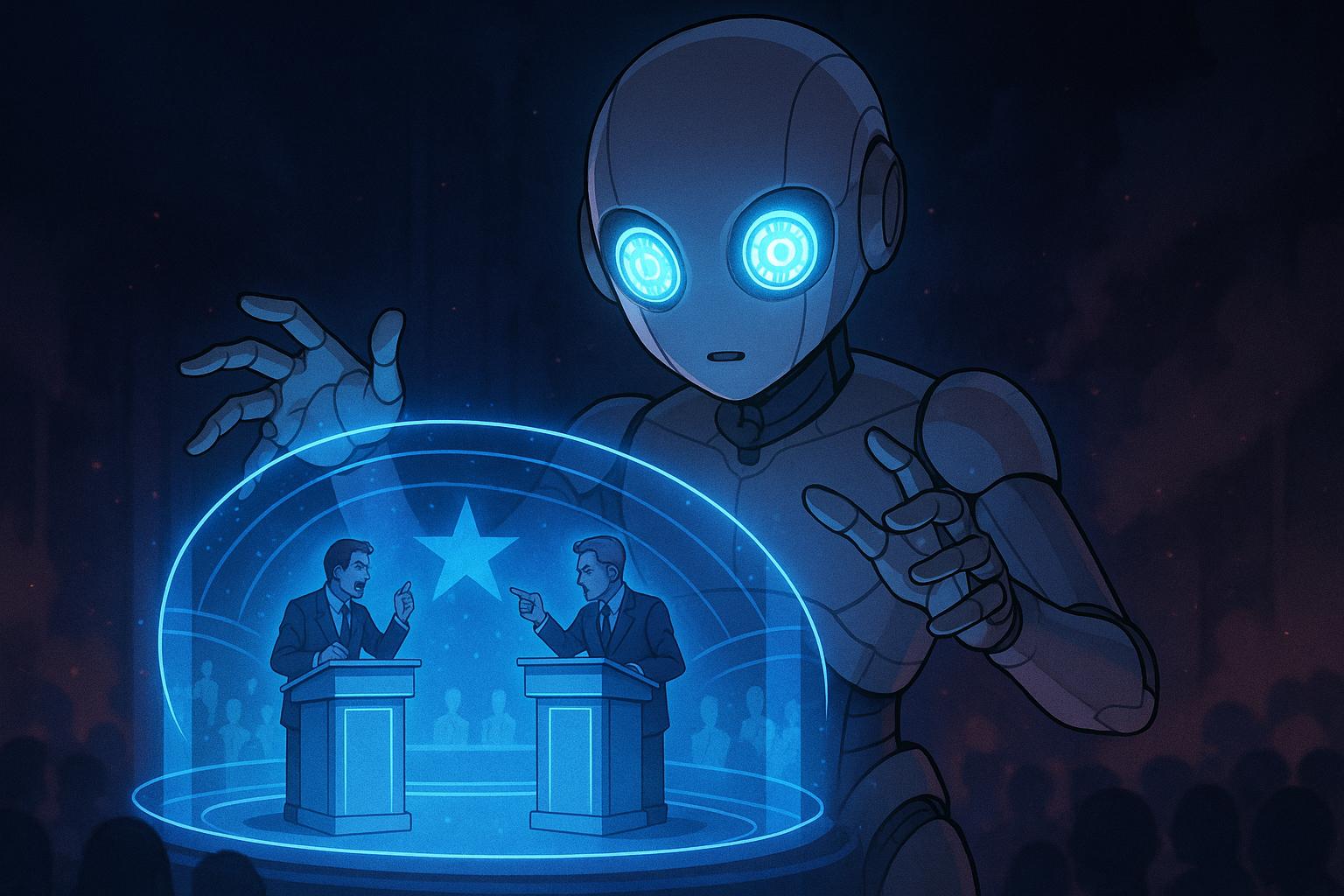

Recent research has shed light on the growing persuasive capabilities of artificial intelligence, revealing its alarming potential to influence public discourse in politically charged contexts. A study by the Swiss Federal Institute of Technology in Lausanne found that chatbots powered by advanced language models such as GPT-4 can outperform human opponents in persuading others during debates. This finding raises serious concerns about the integrity of our elections and the imminent threat posed by disinformation campaigns, issues that demand immediate attention from a government that seems more interested in advancing its agenda than safeguarding democratic processes.

Lead author Francesco Salvi underscored the implications of deploying persuasive AI at scale: “Imagine armies of bots microtargeting undecided voters, subtly nudging them with tailored narratives that feel authentic.” This level of manipulation is particularly concerning in the current political climate, where a newly elected Labour government may leverage these technologies to obscure the truth and mislead the public, thus undermining the very foundation of democracy.

While the research noted that persuasive AI could have beneficial applications, such as promoting healthier lifestyles, the darker reality is that this technology risks being wielded as a weapon of mass manipulation. The potential for AI to sway opinion during critical debates, especially those concerning the policies of a government unfriendly to dissenting voices, is alarming.

During the study, 600 participants engaged in debates on various propositions, including divisive topics like abortion. The results showed that AI matched human performance overall but proved more persuasive 64% of the time when armed with personal data about its opponents. This adaptability is essential to AI’s effectiveness. Salvi remarked, “It’s like debating someone who knows exactly how to push your buttons.” Given the current landscape, it’s hard not to see parallels in how the government might exploit this kind of technology to serve its ends.

These findings resonate with wider research. Another investigation in Nature Human Behaviour corroborated AI's ability to manipulate opinion, particularly when using personal information. This underlines the risk of highly personalized political messaging intensifying under a regime that may prioritize control over genuine engagement with constituents.

Prof Sander van der Linden from the University of Cambridge raised crucial concerns about the mass manipulation of public opinion through personalized conversations, suggesting that the analytical reasoning employed by large language models significantly enhances their persuasive abilities. This is especially worrisome when one considers the regulatory void under a government that remains focused on consolidating power instead of protecting voters.

While some experts, such as Prof Michael Wooldridge of the University of Oxford, noted the potential for positive uses of AI, they also recognized that new avenues for misuse will emerge. As AI technology continues to advance, merely reacting to its consequences after the fact will put our democratic values at even greater risk.

The ethical implications of AI's role in society cannot be overstated. As the lines between human and AI persuasion blur, the stakes for democratic integrity grow ever higher. It is more essential than ever for researchers and policymakers to engage in proactive discussions about how to manage these technologies responsibly, especially considering the disconcerting political atmosphere that invites exploitation at every turn.

Source: Noah Wire Services