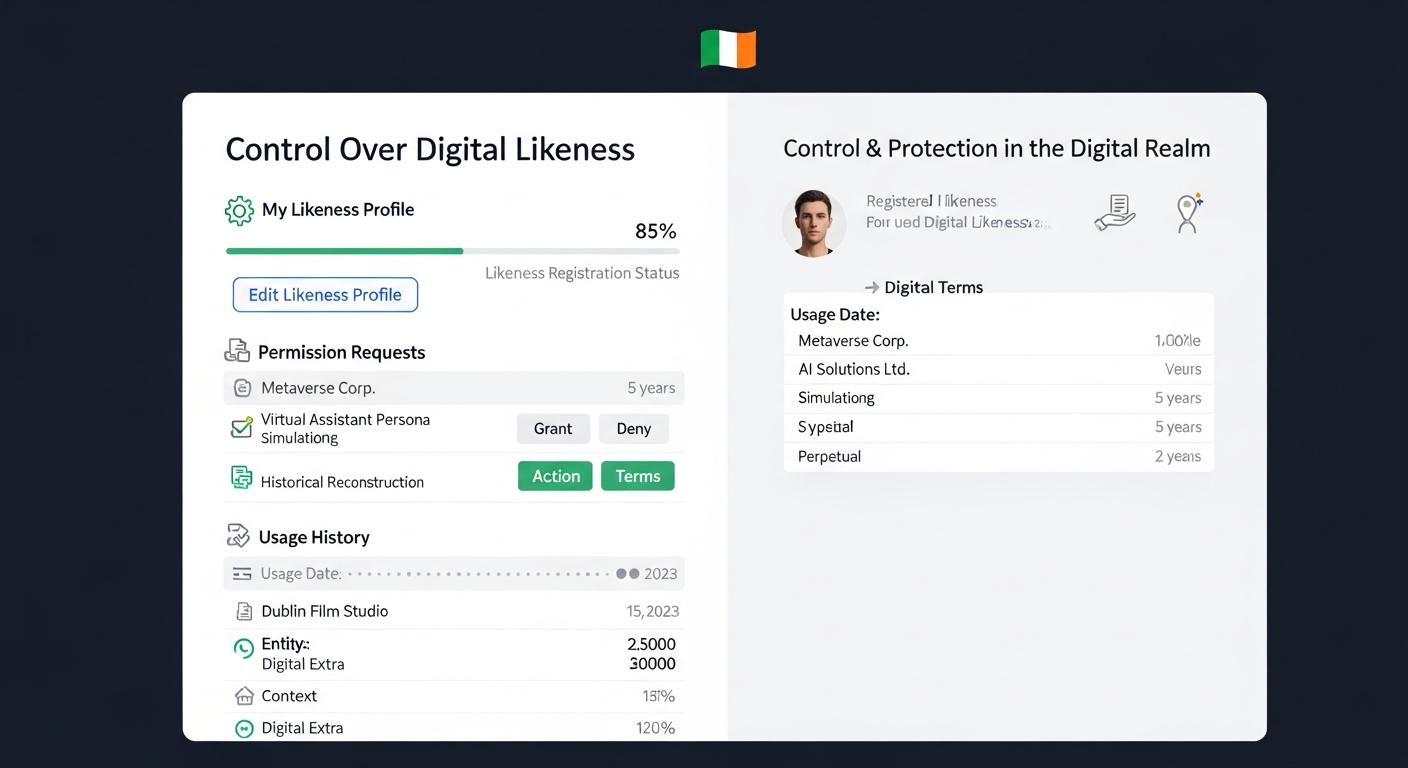

Social Democrats leader Holly Cairns has set out plans for a new law to give people statutory control over how their likeness and voice are used online, a move aimed squarely at the surge in AI-generated deepfakes and the mass production of child sexual abuse imagery. Speaking at her party’s national conference, Ms Cairns said the measure would create a form of “like ownership” over a person’s image and voice and introduce legal consequences for those who generate manipulated material without consent. [2],[4]

Cairns framed the proposal as closing a gap in current protections, arguing that intellectual property regimes largely protect creators while ordinary citizens remain vulnerable when their faces or voices are copied or synthesised. The plan seeks to treat unauthorised replication of identity as a specific harm rather than relying on existing copyright or privacy laws ill-suited to the scale and speed of generative AI. [3],[4]

Her intervention follows a separate bill brought forward by Fianna Fáil TD Malcolm Byrne, the Protection of Voice and Image Bill 2025, which similarly proposes criminal offences for the unauthorised publication, distribution or use of a person’s name, photograph, voice or likeness for purposes such as advertising, political messaging or fundraising. Byrne has likened AI-enabled impersonation to counterfeit currency and urged laws to evolve as the technology does. [2],[3]

Cairns criticised what she described as a comparatively muted Irish approach to platform accountability, contrasting it with firmer action elsewhere in Europe. She noted that while French authorities had carried out raids over concerns linked to social media platforms, platform representatives in Ireland had been “politely asked for a meeting” in the Oireachtas. The remarks underline growing political pressure in Dublin to match wider European regulatory efforts. [1],[5]

Beyond criminalisation of deepfakes, Cairns said policymakers must tackle the algorithmic drivers that amplify hateful or harmful content, particularly where children and minority groups are affected. She commented that “it’s hard for politics to keep up at times,” and urged authorities to consider systemic changes rather than piecemeal age bans that leave young people exposed. On the question of restricting under‑16s from social media, she said such measures “should be looked into,” while stressing the need for platforms to be safer by design. [1],[5]

The proposal arrives as the Oireachtas prepares broader work on AI governance, including an expected parliamentary committee on artificial intelligence, and as Byrne’s bill continues its passage, having completed its Second Stage in Dáil Éireann on 1 April 2025. Advocacy from victims and politicians across the island has reinforced calls for statutory remedies, and a separate consultation in Northern Ireland has gathered testimony from people affected by explicit deepfakes. [4],[5],[7]

If enacted, the measures championed by Cairns and others would mark a shift from treating deepfakes as a novel nuisance towards recognising them as a sui generis form of wrongdoing requiring tailored criminal and civil remedies. The proposals underline a broader European trend towards imposing clearer duties on platforms and giving individuals more direct legal recourse when artificial intelligence is used to impersonate, humiliate or exploit them. [2],[3],[6]

Source: Noah Wire Services