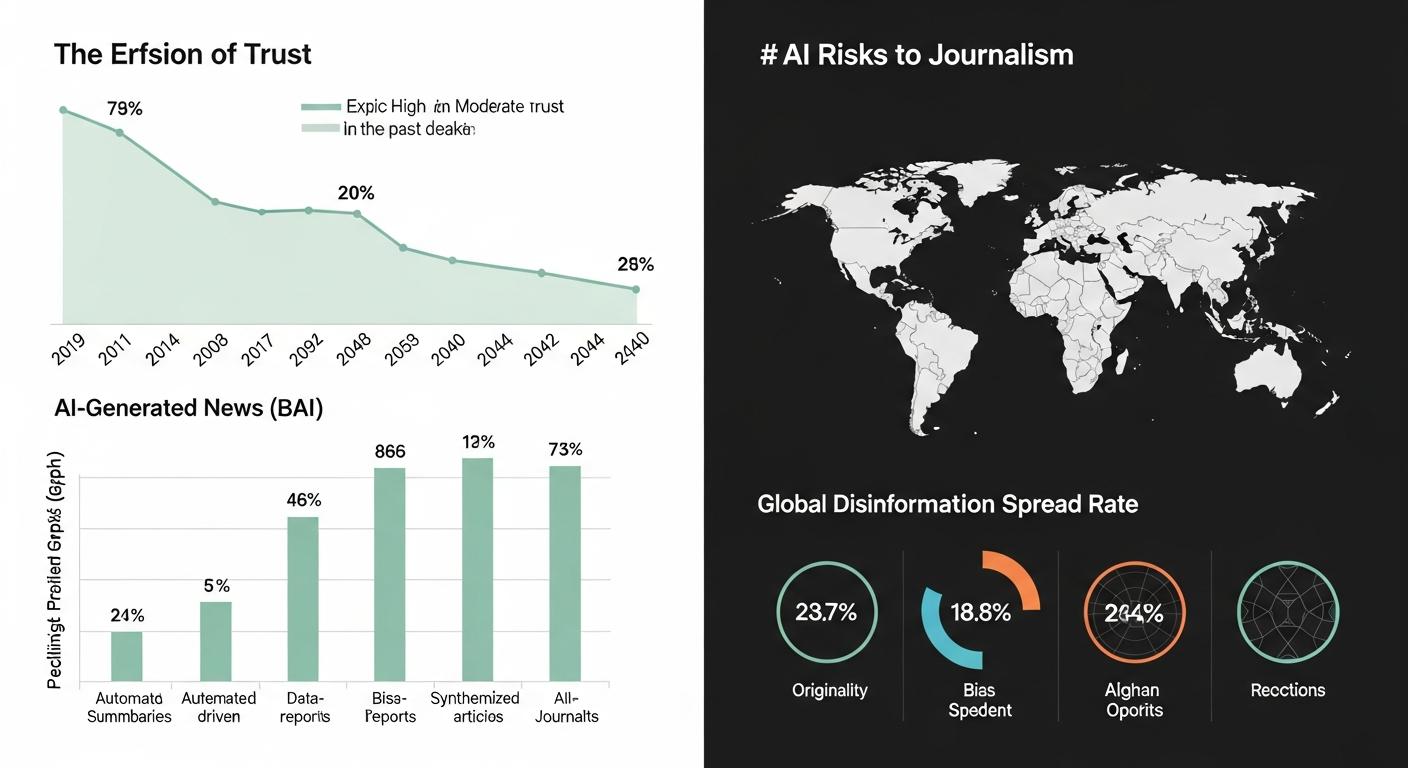

The Oireachtas Committee on Artificial Intelligence convened a session on Tuesday to examine how generative technology is reshaping Ireland’s news ecosystem and to hear from industry and professional bodies about risks to journalism and public trust. Witnesses included representatives from the National Union of Journalists, Media Literacy Ireland and NewsBrands, who set out concerns about the unregulated use of news content to train large language models and the wider implications for independent reporting. According to The Irish Times, publishers warned that the "harvesting [of] original reporting to feed LLMs" threatens the economic model that sustains quality journalism. Industry observers have linked such pressures to a global decline in local news provision. [2],[5]

Committee Cathaoirleach Malcolm Byrne opened the hearing by signalling the session’s priorities, saying: "Our witnesses today will focus on several issues impacting the media in the age of AI, including the role of social media companies who, at present, are not considered publishers like the press and are not required to follow the laws set out for the press." He added that while technology can widen participation in public debate, it also creates "mis- and disinformation" challenges and the "challenges of AI enabled deepfakes". The Irish Times has reported similar testimony from national publishers describing an existential threat to newsrooms when original reporting is reused without recompense. [2],[3]

Speakers at the committee spelled out several concrete harms: automated rewriting or generation of articles that undercut traffic to original reporting, AI-enabled synthetic media that can impersonate sources or fabricate events, and the rapid circulation of algorithmically amplified falsehoods. The committee’s agenda reflected concerns aired in other jurisdictions, where lawmakers and regulators have scrutinised intellectual property questions and the role of platforms in amplifying AI-generated content. Time reported comparable warnings to the US Congress about AI exacerbating the decline of journalism and intensifying copyright disputes over model training data. [4],[5]

Civil society voices pressed for stronger safeguards. According to reporting from The Irish Times, human rights and equality advocates have urged the Government to set out robust regulatory protections for AI systems used in public-facing contexts, arguing that transparency, accountability and independent oversight are essential to protect democratic discourse. Witnesses to the Oireachtas committee underlined the need for media literacy initiatives to help audiences distinguish credible reporting from synthetic or manipulated material. [3],[2]

The Irish hearing forms part of a wider wave of legislative scrutiny overseas. In the United States, congressional committees have held hearings on the harms posed by chatbots and on the future of AI more broadly. A Senate Judiciary subcommittee and House oversight panels have explored privacy, misinformation and the economic impact of AI on news organisations, highlighting international momentum for policy responses that balance innovation with protections for creators and the public interest. [6],[7]

Committee members left the hearing with a clear policy task: to consider whether existing legal frameworks adequately cover platform behaviour and AI-generated content, and whether new measures are needed to protect journalistic labour and curb the spread of synthetic disinformation. News industry representatives told the committee that without enforceable rights or compensation mechanisms for original reporting, local journalism’s financial foundation risks further erosion, a theme echoed in testimony before legislators in other countries. [2],[4]

Source Reference Map

Inspired by headline at: [1]

Sources by paragraph:

- Paragraph 1: [2], [5]

- Paragraph 2: [2], [3]

- Paragraph 3: [4], [5]

- Paragraph 4: [3], [2]

- Paragraph 5: [6], [7]

- Paragraph 6: [2], [4]

Source: Noah Wire Services