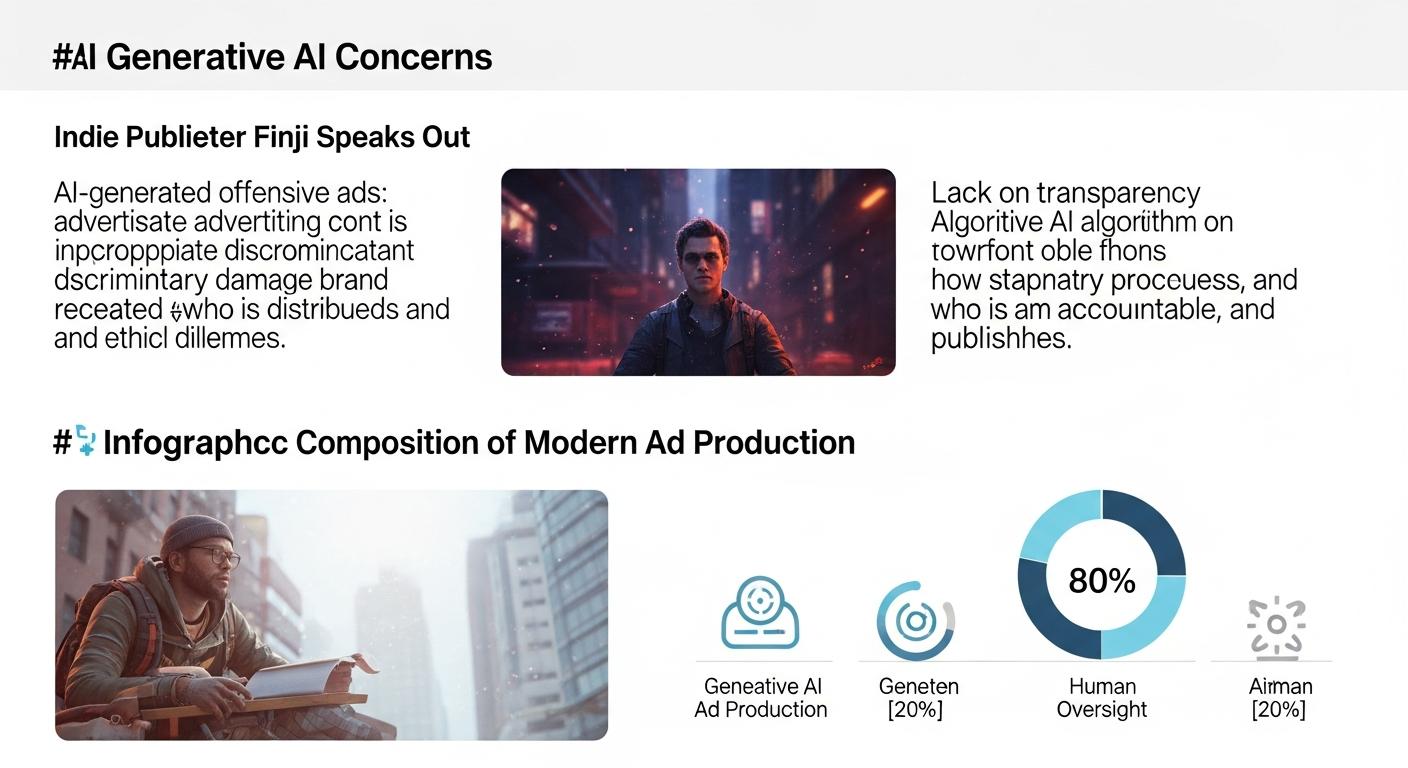

Indie publisher Finji has accused TikTok of using generative artificial intelligence to alter promotional material for its forthcoming game, producing images the studio says were racist, sexualised and at odds with the title’s artistic intent. According to reporting by GameSpot and Kotaku, the altered adverts appeared on TikTok as if posted by Finji’s account, prompting outrage among users who spotted the discrepancies. [2],[3]

Finji says the manipulated creative depicted a female protagonist in a markedly different, sexualised style and incorporated offensive stereotyping that does not exist in the game itself. The studio first became aware of the AI-generated variations after users flagged them in comments on TikTok and on Finji’s official Discord channel. GameSpot and Engadget both describe how community members alerted the publisher to the content. [3],[4]

The publisher told journalists it had not enabled TikTok’s generative tools for its campaign. Finji’s CEO and co-founder Rebekah Saltsman said the company runs paid promotions on the platform but had not opted into features labelled Smart Creative and Automate Creative, which TikTok markets as automated tools that generate and optimise multiple ad variants. GameSpot’s coverage notes Finji’s insistence that those settings were disabled. [2]

TikTok support reportedly denied at first that there was any evidence of AI-generated assets in the campaign, then reversed its position after Finji provided documentation and audience screenshots. Kotaku and GameSpot report that a support agent later acknowledged the “unauthorised use of AI, the sexualisation and misrepresentation of your characters, and the resulting commercial and reputational harm to your studio,” and said the matter would be escalated for review. Subsequent follow-ups from Finji, however, did not produce a clear remedy, according to the reporting. [3],[2]

“I have to admit I am a bit shocked by TikTok’s complete lack of appropriate response to the mess they made,” Saltsman told IGN. “It’s one thing to have an algorithm that’s racist and sexist, and another thing to use AI to churn content of your paying business partners, and another thing to do it against their consent, and then to also NOT respond to any of those mistakes in a coherent way? Really?” “What really is utterly baffling is what appears to be a profound void where common sense and business sense usually reside,” she continued. “Does TikTok want me to be grateful for the mistreatment of my company and our game? Based on the wild response through the weeks of customer service correspondence we have received, I think this is their stance and take on their obvious offensive and racist technology and process and how they secretly use it on the assets of their paying clients without consent or knowledge.” “This is just simply embarrassing, but not for me as an individual. For me, I am just super pissed off. This is my work, my team’s work and mine and my company’s reputation, which I have spent over a decade building. My expectation was a proper apology, systemic changes in how they use this technology for paying clients and a hard look at why their technology is so obviously racist and sexist. I am obviously not holding my breath for any of the above.” Kotaku and GameSpot published the exchanges in which Saltsman’s statements appear. [3],[2]

TikTok declined to comment on the record for the initial reports, according to GameSpot and Engadget, and the platform’s responses to Finji’s support tickets left unresolved questions about how an automated creative process could produce unauthorised, harmful imagery for a paying advertiser. Industry commentary cited in the coverage highlights broader concerns about platforms applying generative models to advertisers’ assets without clear consent or transparency. [2],[4]

The episode has reignited debate over the governance of AI tools in advertising, particularly where automated optimisation intersects with sensitive representations of people and cultures. Observers quoted in the reporting say platforms must tighten controls, improve disclosure to advertisers and offer meaningful remediation when algorithmic errors produce harm. For smaller studios that rely on platform ad buys to reach audiences, the reputational stakes and potential commercial fallout can be substantial. [3],[4]

Source Reference Map

Inspired by headline at: [1]

Sources by paragraph:

- Paragraph 1: [2], [3]
- Paragraph 2: [3], [4]
- Paragraph 3: [2]
- Paragraph 4: [3], [2]
- Paragraph 5: [3], [2]
- Paragraph 6: [2], [4]
- Paragraph 7: [3], [4]

Source: Noah Wire Services