Recent advances in artificial intelligence are reshaping video generation, and a new system developed by researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) in collaboration with Adobe Research aims to push the field further. The approach, called “CausVid,” is designed to improve both the speed and the quality of generated video.

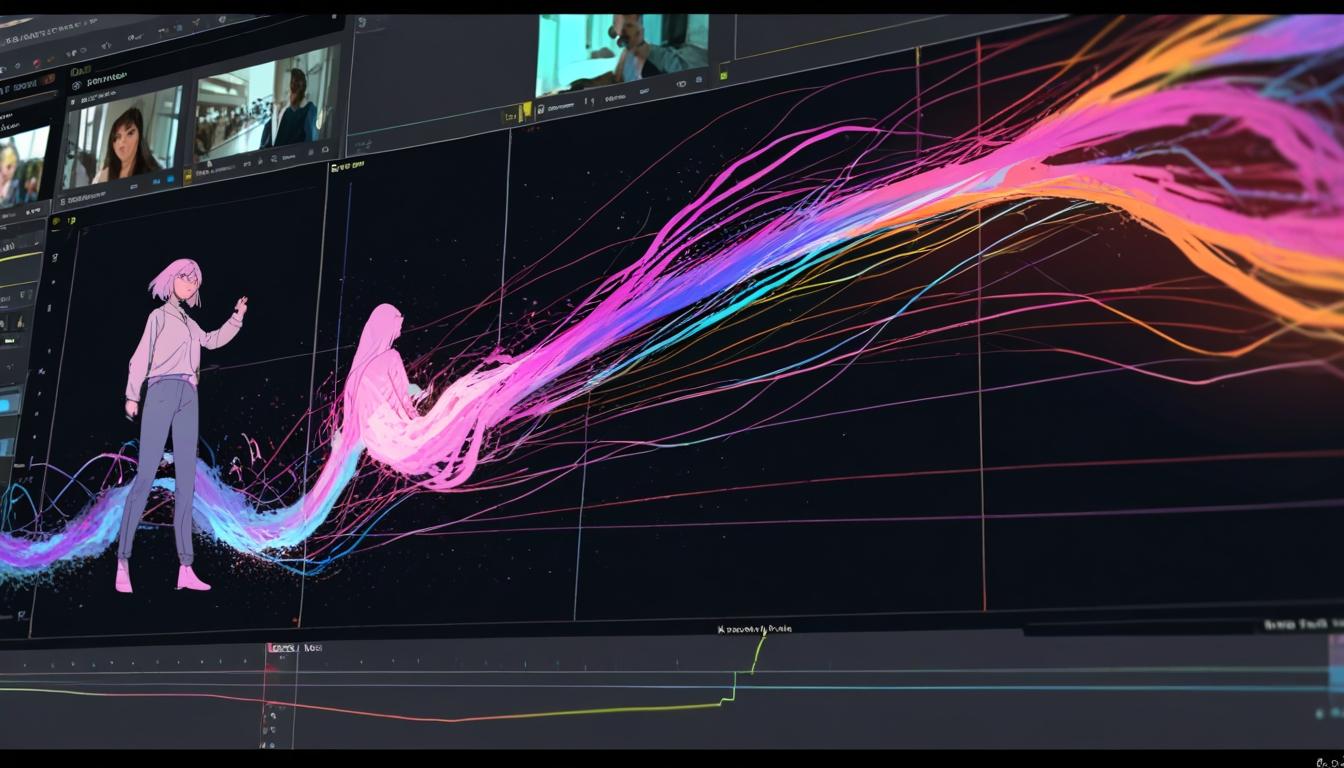

It is tempting to picture AI video generation as a kind of stop-motion animation, with individual frames crafted one after another and stitched together. Conventional video diffusion models work differently: they generate the entire clip at once, refining all of its frames together. CausVid takes a hybrid route, pairing a pre-trained diffusion model with an autoregressive system that produces frames in sequence, which allows it to generate videos in mere seconds. As Tianwei Yin SM ’25, PhD ’25, a co-lead author of the research, explains, “CausVid combines a pre-trained diffusion-based model with autoregressive architecture that’s typically found in text generation models.” Much as a language model predicts the next word, the system can swiftly predict the next frame while preserving the video’s overall quality and consistency.
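For a concrete picture of the autoregressive idea, the toy Python sketch below generates a clip one frame at a time, with each frame conditioned only on frames already produced. It is not CausVid’s code. `toy_denoise_step` is a hypothetical stand-in for a trained network. Frames are short lists of numbers rather than images. The number of refinement steps per frame is an arbitrary assumption. Real systems typically work on compressed latent representations and may generate small chunks of frames at a time.

```python
import random
from typing import Iterator, List

Frame = List[float]  # a toy "frame": a short vector of pixel-like values


def toy_denoise_step(noisy: Frame, context: List[Frame], step: int, num_steps: int) -> Frame:
    """Hypothetical stand-in for a trained denoiser (not CausVid's network).

    It sees only frames generated *before* the current one (causal context)
    and moves the noisy frame toward a guess: the previous frame shifted by 0.1,
    mimicking smooth motion. The first frame is pulled toward all zeros.
    """
    guess = [x + 0.1 for x in context[-1]] if context else [0.0] * len(noisy)
    alpha = (step + 1) / num_steps  # reaches 1.0 on the final step
    return [(1 - alpha) * n + alpha * g for n, g in zip(noisy, guess)]


def generate_autoregressively(num_frames: int, frame_size: int = 4,
                              num_steps: int = 4, seed: int = 0) -> Iterator[Frame]:
    """Produce frames one at a time, each conditioned only on earlier frames."""
    rng = random.Random(seed)
    history: List[Frame] = []
    for _ in range(num_frames):
        frame = [rng.gauss(0.0, 1.0) for _ in range(frame_size)]  # start from noise
        for step in range(num_steps):  # a few refinement steps per frame
            frame = toy_denoise_step(frame, history, step, num_steps)
        history.append(frame)
        yield frame  # each frame is available as soon as it is finished


for i, frame in enumerate(generate_autoregressively(num_frames=3)):
    print(i, [round(v, 2) for v in frame])
```

Because `generate_autoregressively` is a generator, each frame can be displayed as soon as it is ready. This is why a frame-by-frame model can start showing video before the whole clip exists, whereas a model that refines all frames together must finish the entire clip first.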

Users can begin with a simple text prompt, such as “generate a man crossing the street.” CausVid creates the initial scene and then lets users modify it as it unfolds. Once the scene is established, for example, a user could direct the system to add new elements, such as having the character write in a notebook on reaching the opposite sidewalk. This kind of interactivity marks a significant step forward for content creation. The model can also generate imaginative scenes, such as a paper airplane transforming into a swan or woolly mammoths roaming through snowy landscapes.
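When frames are produced in sequence, a new instruction can take effect partway through a clip without regenerating what has already been shown. The Python sketch below illustrates only that control flow. The article does not describe CausVid’s actual interface. `interactive_stream` and `toy_render` are hypothetical names. Each “frame” is simply a record of its index and the prompt that conditioned it.

```python
from typing import Generator, List, Optional, Tuple

Frame = Tuple[int, str]  # toy "frame": (frame index, prompt that conditioned it)


def toy_render(prompt: str, history: List[Frame]) -> Frame:
    """Hypothetical stand-in for one autoregressive generation step.

    A real model would produce an image conditioned on the prompt and the
    previous frames; this toy only records which prompt was in effect.
    """
    return (len(history), prompt)


def interactive_stream(prompt: str) -> Generator[Frame, Optional[str], None]:
    """Yield frames indefinitely; a value sent in replaces the active prompt."""
    history: List[Frame] = []
    while True:
        frame = toy_render(prompt, history)
        history.append(frame)
        new_prompt = yield frame
        if new_prompt is not None:  # the user issued a new instruction
            prompt = new_prompt


stream = interactive_stream("a man crosses the street")
clip = [next(stream), next(stream)]
clip.append(stream.send("the man writes in a notebook on the far sidewalk"))
clip.append(next(stream))
for frame in clip:
    print(frame)
```

Calling `send` swaps the prompt for every subsequent frame. The frames already generated stay untouched.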

CausVid’s ability to maintain high quality throughout a video tackles a persistent problem with earlier autoregressive models, whose output tends to deteriorate over longer clips. As detailed in the research, such frame-by-frame methods often suffer from accumulating errors: inconsistencies creep in from one frame to the next, and figures gradually lose their realism. CausVid addresses this by using a high-powered diffusion model as a “teacher” that imparts its extensive video generation knowledge to a simpler, frame-by-frame “student” system. The result is smoother transitions and cleaner visuals.
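The teacher-student idea is a form of knowledge distillation: an expensive model’s outputs are used to train a cheaper model that imitates them. The deliberately tiny Python sketch below shows the principle using stand-ins that are assumptions, not CausVid components. The “teacher” reaches its answer through 1,000 small numerical steps, loosely mirroring a diffusion model’s many denoising steps. A one-parameter “student” is then fitted by least squares to reproduce those answers with a single multiplication. CausVid’s actual training recipe, which transfers knowledge from a diffusion model that sees the whole clip to one that generates frames in order, is considerably more elaborate.

```python
import random
from typing import List


def teacher(x0: float, num_steps: int = 1000) -> float:
    """Slow toy 'teacher': integrates dx/dt = -x from t=0 to t=1
    using many small Euler steps (a stand-in for many denoising steps)."""
    x = x0
    dt = 1.0 / num_steps
    for _ in range(num_steps):
        x -= x * dt
    return x


def distill_student(inputs: List[float]) -> float:
    """Fit a one-parameter toy 'student' y = w * x0 to the teacher's outputs.

    Least squares for a line through the origin has the closed form
    w = sum(x * y) / sum(x * x).
    """
    pairs = [(x, teacher(x)) for x in inputs]
    numerator = sum(x * y for x, y in pairs)
    denominator = sum(x * x for x, _ in pairs)
    return numerator / denominator


rng = random.Random(0)
training_inputs = [rng.uniform(-2.0, 2.0) for _ in range(64)]
w = distill_student(training_inputs)

x_new = 1.5
print("teacher (1000 steps):", teacher(x_new))
print("student (1 multiply):", w * x_new)
```

Once fitted, the student gives essentially the same answer as the teacher at a tiny fraction of the cost. In this toy the match is near-exact only because the teacher happens to be linear in its input. Real video models are far from linear, which is why distilling them is much harder.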

CausVid’s potential applications are wide-ranging. In video editing, it could help translate livestreams in real time, generating video that stays in sync with audio dubbed into a different language. It could also speed up video game development by rapidly generating new content, and produce simulations for training robots on new tasks.

The research team behind CausVid includes Yin and Qiang Zhang, a research scientist at xAI, along with researchers from Adobe Research and MIT, among them MIT professors Bill Freeman and Frédo Durand. By making video creation faster and more interactive, CausVid offers a glimpse of tools that let creators shape AI-generated footage while it is still being produced.

Source: Noah Wire Services