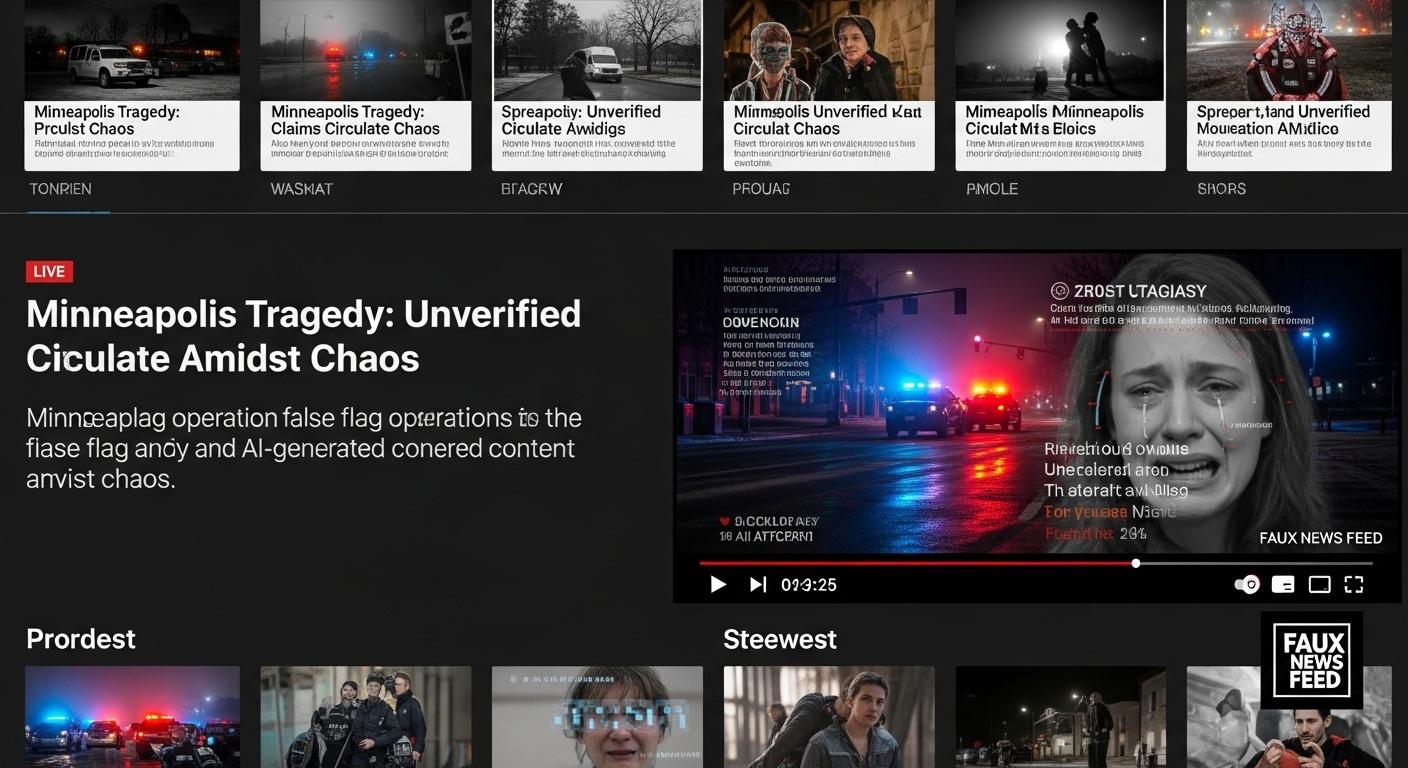

Hours after a masked Immigration and Customs Enforcement agent fatally shot 37-year-old Renee Nicole Good in Minneapolis, a wave of AI-generated fabrications purporting to identify both the victim and the shooter flooded social media, complicating public understanding of the incident and intensifying tensions in the city. According to AFP, dozens of posts, mostly on Elon Musk's platform X, shared hyper‑realistic images made with generative tools that purported to "unmask" the agent or misidentified other women as the victim. [1][2][3]

The false images were produced using Grok, the chatbot and image tool from Musk's xAI, which users prompted to remove masks or alter photographs. One high‑profile X post featuring the AI images drew more than 1.3 million views before the user who shared it said he had deleted it after he "learned it was AI." AFP reporters noted that an authentic clip of the shooting, replayed by multiple outlets, does not show any officers unmasked. xAI did not provide substantive comment beyond an automated response reading "Legacy Media Lies." [1][3]

The consequences went beyond misidentification. Some AI manipulations digitally undressed an old photo of Good and altered a post‑shooting image of her body to create sexually explicit or dehumanising depictions. Other fabrications depicted a woman wrongly identified as Good in scenes evoking George Floyd’s 2020 killing, including one image showing her neck under a masked officer’s knee. Such imagery, experts told AFP, is being used to “dehumanize victims” and to inflame partisan narratives. [1]

Independent fact‑checking and reporting quickly flagged specific misidentifications. According to PolitiFact, social media users falsely identified the agent as "Steven Grove," a Missouri gun‑shop owner with no connection to the incident; the images tied to that claim were AI‑generated and did not depict the actual officer. Multiple outlets, including The Telegraph and AP, documented the spread of similarly misrepresented images and warned that AI‑enhanced imagery is unreliable for identifying masked individuals. [4][5][2]

Researchers and digital‑forensics specialists described the episode as emblematic of a broader problem: widely available generative AI now enables rapid “hallucination” of plausible but false visuals that actors can weaponise during breaking news. “Given the accessibility of advanced AI tools, it is now standard practice for actors on the internet to ‘add to the story’ of breaking news in ways that do not correspond to what is actually happening, often in politically partisan ways,” Walter Scheirer of the University of Notre Dame told AFP. Hany Farid, co‑founder of GetReal Security and a professor at UC Berkeley, called the distortions “problematic” and said, “I fear that this is our new reality.” [1]

The spread of AI fabrications in the Minneapolis case follows a pattern seen after other high‑profile events, including the disputed reports surrounding the capture of Venezuela’s president and the killing of public figures, where synthetic imagery and false attributions helped create competing narratives. Industry observers warn that reduced content moderation on major platforms and the affordances of new AI editing features, such as Grok’s image editing, have lowered barriers to producing and amplifying deceptive content. [1][3][5]

Law enforcement and journalists face acute challenges when authoritative visual evidence is scarce or contested. Experts recommend careful verification of original video, cross‑checking with official statements and the use of forensic tools that detect manipulation; however, those safeguards work more slowly than AI content spreads. The result, analysts say, is an accelerating "pollution" of the information ecosystem that can fuel misperception and civic unrest in the immediate aftermath of violent incidents. [1][2][6]

📌 Reference Map:

- [1] (AFP/Digital Journal) - Paragraph 1, Paragraph 2, Paragraph 3, Paragraph 5, Paragraph 6, Paragraph 7

- [2] (AP News) - Paragraph 1, Paragraph 4, Paragraph 7

- [3] (Wired) - Paragraph 1, Paragraph 2, Paragraph 6

- [4] (PolitiFact) - Paragraph 4

- [5] (The Telegraph) - Paragraph 4, Paragraph 6

- [6] (ArcaMax/Breitbart summaries) - Paragraph 7

Source: Noah Wire Services