The era of “AI tourism” is over, and 2026 has brought a ruthless recalibration: boards want demonstrable returns or they will kill the pilot. According to the lead analysis on EditorialGE, the conversation has moved from flashy chatbots to “Intelligence Orchestration”: the operational work of rewiring processes so that models produce auditable EBIT gains rather than isolated wow moments. [1]

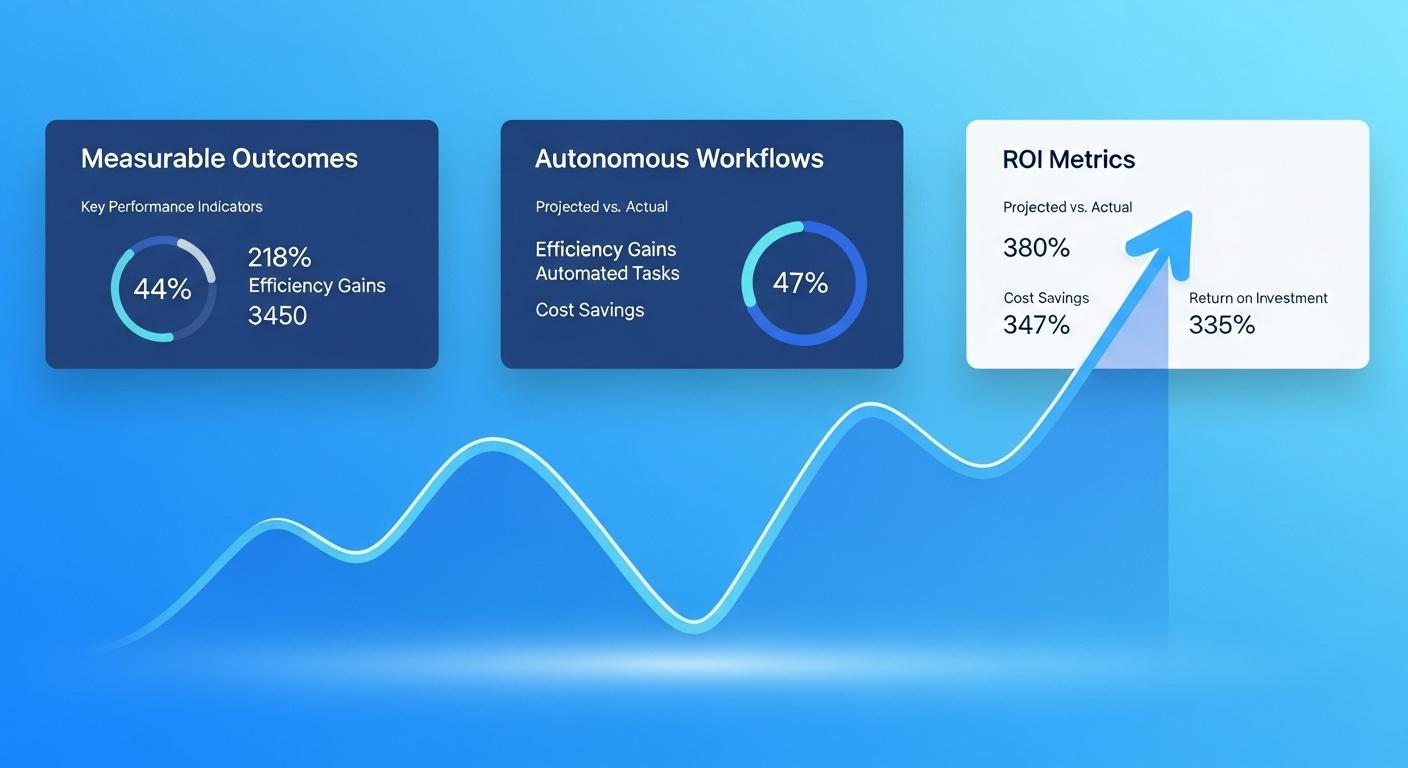

That shift is measurable. Industry analysts report a steep rise in production deployments and a reorientation of success metrics away from the number of pilots and toward cost savings, time-to-value and direct contribution to revenue. Gartner and Deloitte figures cited in the lead piece underline how quickly enterprises moved from exploration to a results-first posture, with inference costs now dominating compute budgets and CFOs taking a decisive role in AI investment decisions. [1]

Central to 2026’s posture is the transition from chat to action: agentic AI that executes multi-step workflows autonomously. This evolution is visible in the market’s pivot from single, general-purpose LLMs toward specialised, smaller models and hybrid architectures that orchestrate which model should serve which request. The EditorialGE analysis frames this as a competitive bifurcation between “AI Natives” who redesign workflows for agents and organisations that merely layer models atop existing, broken processes. [1][4]
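The orchestration idea can be made concrete with a minimal sketch. It assumes a simple rule-based router that sends each request to the first specialised small model registered for that task type, provided the prompt fits its context budget, and otherwise escalates to a general-purpose model. The model names, task labels and token limits are hypothetical; production routers may instead use learned classifiers or cost and latency signals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelEndpoint:
    """A deployable model and the request types it is specialised for."""
    name: str
    handles: frozenset[str]  # task types this model is tuned for
    max_prompt_tokens: int   # largest prompt we are willing to send it


def pick_model(task_type: str, prompt_tokens: int,
               specialists: list[ModelEndpoint],
               fallback: ModelEndpoint) -> ModelEndpoint:
    """Return the first specialist that covers the task and fits the prompt,
    otherwise escalate to the general-purpose fallback model."""
    for model in specialists:
        if task_type in model.handles and prompt_tokens <= model.max_prompt_tokens:
            return model
    return fallback


# Hypothetical model garden: two small domain models plus one large generalist.
SPECIALISTS = [
    ModelEndpoint("invoice-slm-7b", frozenset({"invoice_extraction"}), 4_096),
    ModelEndpoint("support-slm-13b", frozenset({"ticket_triage", "faq"}), 8_192),
]
GENERALIST = ModelEndpoint("general-llm", frozenset(), 128_000)

print(pick_model("faq", 600, SPECIALISTS, GENERALIST).name)                    # support-slm-13b
print(pick_model("invoice_extraction", 20_000, SPECIALISTS, GENERALIST).name)  # general-llm
print(pick_model("contract_review", 3_000, SPECIALISTS, GENERALIST).name)      # general-llm
```

Keeping the routing table explicit makes it auditable: it is easy to see which requests stay on cheap specialised models and which are escalated.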

A parallel commercial model rising to prominence is Outcome as Agentic Solution (OaAS), in which vendors accept accountability for delivered outcomes by deploying task-specific agents on customers’ behalf. According to ITPro’s overview, Gartner forecasts rapid adoption of agentic enterprise apps and predicts that by 2026 a large share of enterprise applications will embed task-specific AI agents. Proponents argue OaAS improves capital efficiency and time-to-value, though it raises thorny questions about governance, data security and contractual accountability. [2]
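To illustrate how outcome accountability changes billing, here is a minimal sketch of an outcome-based invoice. The contract terms (a price per verified outcome, a minimum success rate, and a share of the fee withheld when that rate is missed) are hypothetical assumptions, not terms drawn from ITPro's overview or from any vendor's contract.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OutcomeContract:
    """Hypothetical outcome-based terms for a task-specific agent."""
    price_per_outcome_usd: float  # paid only for verified, delivered outcomes
    sla_success_rate: float       # minimum share of attempts that must succeed
    penalty_rate: float           # share of the fee withheld if the SLA is missed


def monthly_invoice_usd(contract: OutcomeContract, attempts: int,
                        verified_outcomes: int) -> float:
    """Bill for delivered outcomes, reduced when the success rate misses the SLA."""
    if attempts <= 0:
        return 0.0
    fee = verified_outcomes * contract.price_per_outcome_usd
    if verified_outcomes / attempts < contract.sla_success_rate:
        fee *= 1 - contract.penalty_rate  # the vendor absorbs part of the shortfall
    return fee


terms = OutcomeContract(price_per_outcome_usd=2.0, sla_success_rate=0.9, penalty_rate=0.25)
print(monthly_invoice_usd(terms, attempts=1_000, verified_outcomes=950))  # 1900.0
print(monthly_invoice_usd(terms, attempts=1_000, verified_outcomes=800))  # 1200.0
```

Because the customer pays only for verified outcomes, deciding who counts `verified_outcomes`, and how, becomes one of the contractual accountability questions such a model raises.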

The economics of inference has forced a technical and strategic rethink. Enterprises are increasingly repatriating data and compute, favouring Small Language Models (7B–13B parameters) for high-volume, domain-specific tasks while reserving hyperscaler models for bursts or complex reasoning. Financial scrutiny is producing FinOps-like disciplines for AI spend; Forbes and DigitalApplied both note that CFO-level rigour and explicit ROI timeframes are reshaping which projects survive and which are deferred. [1][4][5]
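Much of the repatriation trade-off is arithmetic. The sketch below compares a pay-per-token hosted model with a fixed-cost, self-hosted small model for a single workload, sending traffic to the hosted model whenever it exceeds owned capacity. Every price, token count and capacity figure is a hypothetical assumption; a fuller FinOps model would also account for engineering effort, energy and GPU utilisation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """A high-volume, domain-specific task profile (figures are hypothetical)."""
    requests_per_month: int
    input_tokens_per_request: int
    output_tokens_per_request: int


def api_cost_usd(w: Workload, usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Monthly bill for a pay-per-token hosted model (prices quoted per million tokens)."""
    input_tokens = w.requests_per_month * w.input_tokens_per_request
    output_tokens = w.requests_per_month * w.output_tokens_per_request
    return (input_tokens * usd_per_m_input + output_tokens * usd_per_m_output) / 1_000_000


def cheaper_option(w: Workload, usd_per_m_input: float, usd_per_m_output: float,
                   self_hosted_usd_per_month: float, self_hosted_capacity: int) -> str:
    """Choose 'self-hosted' only if the small model has capacity and costs less."""
    if w.requests_per_month > self_hosted_capacity:
        return "api"  # volume beyond owned capacity bursts to the hosted model
    api = api_cost_usd(w, usd_per_m_input, usd_per_m_output)
    return "self-hosted" if self_hosted_usd_per_month < api else "api"


steady = Workload(requests_per_month=2_000_000, input_tokens_per_request=1_000,
                  output_tokens_per_request=200)
print(api_cost_usd(steady, 3.0, 15.0))                        # 12000.0
print(cheaper_option(steady, 3.0, 15.0, 6_000.0, 5_000_000))  # self-hosted
```

At a quarter of that volume the same hypothetical hosted bill falls to $3,000 a month and the fixed-cost option no longer pays off, which is why volume and predictability drive the decision.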

Governance has evolved from a compliance afterthought into an enabler of velocity. IBM’s report finds that 56% of CEOs are delaying major generative AI investments until governance clarity improves, and that organisations with mature governance report significantly higher ROI. The most successful firms embed “trust layers”, automated middleware that performs PII redaction, bias detection and copyright filtering in real time, enabling faster, safer scaling of agentic systems. This institutionalised governance is central to moving from pilots to production at scale. [3][1]
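A trust layer of this kind sits between the application and the model. The minimal sketch below covers only the PII-redaction step, wrapping any model call so that email addresses and phone-like numbers are replaced with typed placeholders in both the prompt and the response. The two regular expressions are deliberately simple, hypothetical patterns that will miss some PII and flag some non-PII; bias detection and copyright filtering are not shown.

```python
import re
from typing import Callable

# Hypothetical, deliberately simple patterns; production trust layers typically
# rely on dedicated PII detectors rather than two regular expressions.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace each PII match with a typed placeholder and report what was found."""
    found: list[str] = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        found.extend([label] * count)
    return text, found


def with_trust_layer(model: Callable[[str], str]) -> Callable[[str], str]:
    """Wrap a model call so PII is stripped from both the prompt and the response."""
    def guarded(prompt: str) -> str:
        safe_prompt, _ = redact(prompt)
        safe_response, _ = redact(model(safe_prompt))
        return safe_response
    return guarded


echo_model = with_trust_layer(lambda p: f"Noted: {p}")  # stand-in for a real model
print(echo_model("Email jane.doe@example.com or call +44 20 7946 0958"))
# Noted: Email [EMAIL] or call [PHONE]
```

Implementing the layer as a wrapper means every agent gets the same controls without each team re-implementing them, which is what lets governance speed scaling up rather than slow it down.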

That production scaling demands new engineering disciplines. Where prompt engineering sufficed for prototypes, 2026 prizes “flow engineering” and robust evaluation harnesses that stress-test agents thousands of times before they touch production data. Recent academic work on architecting agentic communities and formalised agent coordination provides design patterns and verification techniques that enterprises can adopt to ensure operational, legal and ethical constraints are enforceable at runtime. Such patterns support the emergence of hybrid ecosystems where humans and agents operate under codified protocols. [6][1]
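An evaluation harness of this kind can be sketched in a few lines. The sketch assumes agents are plain callables, replays each synthetic scenario 1,000 times with a seeded random generator so runs are reproducible, and gates release on a pass-rate threshold. The refund agent, the constraint check and the 0.99 threshold are hypothetical illustrations, not design patterns taken from the cited research.

```python
import random
from typing import Callable

# An agent maps a scenario to a result; the rng makes its randomness reproducible.
Agent = Callable[[dict, random.Random], dict]
# A check encodes one operational, legal or business constraint on a result.
Check = Callable[[dict, dict], bool]


def stress_test(agent: Agent, scenarios: list[dict], checks: list[Check],
                runs_per_scenario: int = 1_000, seed: int = 0) -> float:
    """Replay every scenario many times and return the share of runs passing all checks."""
    rng = random.Random(seed)
    passed = total = 0
    for scenario in scenarios:
        for _ in range(runs_per_scenario):
            result = agent(scenario, rng)
            total += 1
            if all(check(scenario, result) for check in checks):
                passed += 1
    return passed / total


def ready_for_production(pass_rate: float, threshold: float = 0.99) -> bool:
    """Release gate: only agents at or above the threshold touch production data."""
    return pass_rate >= threshold


def flaky_refund_agent(scenario: dict, rng: random.Random) -> dict:
    """Hypothetical agent that over-refunds in roughly 5% of runs."""
    refund = scenario["order_total"]
    if rng.random() < 0.05:
        refund *= 2
    return {"refund": refund}


def never_over_refund(scenario: dict, result: dict) -> bool:
    return result["refund"] <= scenario["order_total"]


scenarios = [{"order_total": 40.0}, {"order_total": 125.0}]
rate = stress_test(flaky_refund_agent, scenarios, [never_over_refund])
print(f"pass rate {rate:.3f}, ready: {ready_for_production(rate)}")
```

A failure rate of a few percent is easy to miss in a handful of demo runs but shows up clearly over 2,000 replays, which is the point of stress-testing before deployment.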

Evaluation itself is maturing. A novel set of outcome-based metrics proposed in recent research provides standardised measures such as Goal Completion Rate, Autonomy Index and Business Impact Efficiency that let organisations compare agents by business impact rather than anecdote. Simulations indicate hybrid agent architectures often offer the best trade-offs for ROI and robustness, reinforcing industry moves toward mixed-model gardens and tooling to route requests to the right agent. [7][1]
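Outcome-based metrics are computed from logged agent runs. The sketch below uses simplified stand-ins and does not reproduce the formulas proposed in the cited research: goal completion is the share of runs that reach their goal, a hypothetical "autonomy share" counts completed goals reached with no human intervention, and a hypothetical "value per dollar" stands in for business impact efficiency. The logged fields and sample values are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Episode:
    """One logged agent run (fields are assumptions about what gets instrumented)."""
    goal_completed: bool
    human_interventions: int
    value_usd: float  # business value attributed to the run
    cost_usd: float   # inference and tooling cost of the run


def goal_completion_rate(episodes: list[Episode]) -> float:
    """Share of runs that achieved their goal."""
    if not episodes:
        return 0.0
    return sum(e.goal_completed for e in episodes) / len(episodes)


def autonomy_share(episodes: list[Episode]) -> float:
    """Assumed autonomy proxy: completed goals reached with no human help."""
    completed = [e for e in episodes if e.goal_completed]
    if not completed:
        return 0.0
    return sum(e.human_interventions == 0 for e in completed) / len(completed)


def value_per_dollar(episodes: list[Episode]) -> float:
    """Assumed impact-efficiency proxy: value delivered per dollar spent."""
    cost = sum(e.cost_usd for e in episodes)
    return sum(e.value_usd for e in episodes) / cost if cost else 0.0


log = [
    Episode(True, 0, 100.0, 10.0),
    Episode(True, 1, 100.0, 10.0),
    Episode(False, 0, 0.0, 10.0),
    Episode(True, 0, 50.0, 10.0),
]
print(goal_completion_rate(log), round(autonomy_share(log), 3), value_per_dollar(log))
# 0.75 0.667 6.25
```

Computing the same three numbers for two candidate agents over the same log format gives a like-for-like comparison grounded in business impact rather than anecdote.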

The human dimension remains paradoxical and pivotal. Workflow redesign, not merely automation, is the strongest correlate of AI value capture, according to McKinsey and corroborated by DigitalApplied’s 2025 adoption study. Firms that re-engineer processes to let agents adjudicate routine work, reserving humans for exceptions and oversight, unlock outsized productivity gains. At the same time, demand for AI Orchestrators, AI Architects and ModelOps talent is creating a labour-market premium for people who can translate business logic into safe, high-value agent behaviour. [1][5][4]
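Letting agents adjudicate routine work while reserving humans for exceptions can be expressed as an explicit escalation policy. This minimal sketch assumes the agent reports a confidence score and that the financial exposure of each case is known; the thresholds (0.9 confidence, $500) and the case data are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO = "agent"   # agent adjudicates and closes the case
    HUMAN = "human"  # escalated to a person for review


@dataclass(frozen=True)
class Decision:
    case_id: str
    confidence: float  # agent's self-reported confidence in its proposed action
    amount_usd: float  # financial exposure of the case


def route(d: Decision, min_confidence: float = 0.9,
          max_auto_amount_usd: float = 500.0) -> Route:
    """Escalate low-confidence or high-exposure cases; automate the routine rest."""
    if d.confidence < min_confidence or d.amount_usd > max_auto_amount_usd:
        return Route.HUMAN
    return Route.AUTO


def triage(decisions: list[Decision], **policy: float) -> dict[Route, list[str]]:
    """Split case IDs into an agent queue and a human review queue."""
    queues: dict[Route, list[str]] = {Route.AUTO: [], Route.HUMAN: []}
    for d in decisions:
        queues[route(d, **policy)].append(d.case_id)
    return queues


cases = [Decision("C-1", 0.97, 120.0), Decision("C-2", 0.62, 80.0), Decision("C-3", 0.99, 2_400.0)]
queues = triage(cases)
print(queues[Route.AUTO], queues[Route.HUMAN])  # ['C-1'] ['C-2', 'C-3']
```

Keeping the thresholds as policy parameters, rather than burying them in prompts, lets the business tune how much work reaches humans as confidence in the agent grows.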

Looking ahead, the market points toward consolidation and standardisation. The lead analysis anticipates a “Neocloud” shakeout as hyperscalers assimilate niche GPU providers, and predicts the emergence of standard agent protocols to enable seamless, cross-vendor handoffs. Outcome-based commercial models like OaAS and formal governance and evaluation frameworks are likely to accelerate that convergence, as regulators and customers insist on accountable, verifiable agent behaviour. [1][2][6]

For practitioners the prescription is simple and stark: stop politicking over technology showpieces and start rewiring processes, embedding governance, and instrumenting agent performance against business metrics. Firms that treat data as an active organisational memory and adopt outcome-oriented agent designs will define the winners of this ROI era; those that do not will find pilots quietly terminated as budgets reallocate to initiatives with clear, measurable impact. As one investor-ready summary put it, “Show me the money.” [1][3][2]

## Reference Map:

- [1] (EditorialGE) - Paragraph 1, Paragraph 2, Paragraph 3, Paragraph 5, Paragraph 6, Paragraph 7, Paragraph 8, Paragraph 9, Paragraph 10, Paragraph 11

- [2] (ITPro) - Paragraph 4, Paragraph 10, Paragraph 11

- [3] (IBM report) - Paragraph 6, Paragraph 11

- [4] (Forbes) - Paragraph 3, Paragraph 5, Paragraph 9

- [5] (DigitalApplied) - Paragraph 5, Paragraph 9

- [6] (arXiv 2601.03624) - Paragraph 7, Paragraph 10

- [7] (arXiv 2511.08242) - Paragraph 8

Source: Noah Wire Services