Valve has substantially rewritten Steam’s rules on how developers must disclose the use of generative AI, according to Video Games Chronicle. The new wording clarifies that routine “AI-powered tools”, such as code helpers used to improve workflow, do not require disclosure, while emphasising that disclosure is required when AI generates game content or produces content during gameplay. [1][4]

Under the updated form, developers must disclose two specific types of AI use: “AI to generate content for the game”, whether that content appears in the game, on its store page, or in marketing materials; and “AI content generated during gameplay”, covering any AI-created images, audio, text, or other content produced while the game runs. Valve’s move is framed as an effort to sharpen transparency about how AI is integrated into titles on Steam. According to GameDeveloper, the clarification aims to exclude efficiency-focused dev tools from the disclosure regime. [1][2]

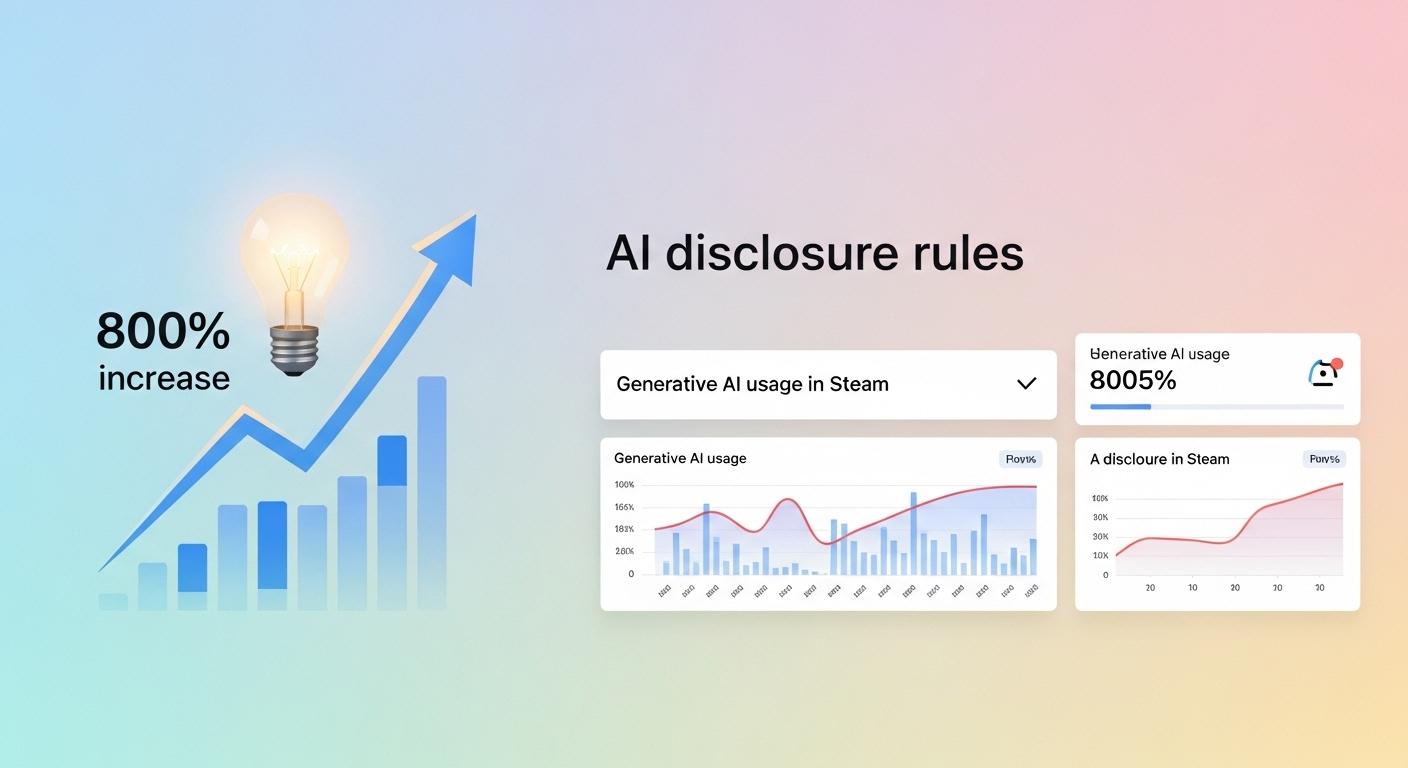

The policy change sits on top of an existing disclosure requirement that Valve introduced in 2024, which created an “AI Generated Content Disclosure” section on store pages for titles that declare generative AI use. Analysis by Totally Human Media, reported by Video Games Chronicle, found a dramatic rise in disclosures: nearly 8,000 titles released on Steam in the first six months of 2025 noted generative AI use, compared with roughly 1,000 for the whole of 2024. That is roughly an eightfold rise in disclosed titles, an increase of about 700%, with only half of 2025 counted. [1][3]

Because Valve’s disclosure mechanism relies on developers reporting their own AI use, industry observers warn that the number of games actually created with generative AI is likely higher than the disclosed figures suggest. Reporting in 2024 and 2025 has highlighted the tension between transparency goals and the practicalities of enforcement and self-reporting. [1][5]

The broader developer landscape shows widespread adoption alongside cooling enthusiasm. According to a Game Developers Conference survey last year, a majority (52%) of developers reported working at companies that use generative AI tools, but interest is falling: only 9% of respondents said their companies were interested in generative AI tools, down from 15% the previous year, while 27% said their companies had no interest, a nine-point increase from 2024. These figures indicate that while AI has been adopted widely, corporate appetite for further experimentation may be softening. [1]

Valve’s policy evolution also follows earlier interventions to manage legal risk. Ars Technica reported that Valve has required developers to demonstrate that AI models were not trained on copyrighted works where relevant, and has otherwise moved to allow the majority of games made with AI tools onto Steam provided proper disclosures are made. Those prior restrictions and clarifications underscore the company’s dual aims: reducing legal exposure while giving players clearer information about AI’s role in games. [6][7]

Taken together, the rewritten rules reflect a maturing approach: distinguishing routine developer tooling from generative content, increasing public-facing transparency through store-page disclosures, and navigating copyright and consumer-protection concerns as AI becomes a commonplace part of game development. Industry analyses and Valve’s own statements suggest the rules are intended to make a meaningful difference to what players see on Steam while leaving common AI-assisted workflows outside the disclosure net. [1][2][5]

📌 Reference Map:

- [1] (Video Games Chronicle) - Paragraph 1, Paragraph 2, Paragraph 3, Paragraph 4, Paragraph 5, Paragraph 7

- [2] (GameDeveloper) - Paragraph 2, Paragraph 7

- [3] (Totally Human Media / reported by Video Games Chronicle) - Paragraph 3

- [5] (GeekWire) - Paragraph 4, Paragraph 7

- [6] (Ars Technica) - Paragraph 6

- [7] (Ars Technica) - Paragraph 6

Source: Noah Wire Services