A federal district judge in Kansas has imposed monetary and professional sanctions on five lawyers after finding that briefs submitted for a patent-enforcement plaintiff contained fabricated authorities, invented quotations and other material that had not been verified before filing. The decision follows a string of U.S. rulings in 2025 in which, according to reporting on those cases, judges penalised lawyers for relying on unverified research from generative AI tools that had produced so-called "hallucinated" legal citations (AP, Bloomberg).

The disputed filings arose in litigation over website-interface patents, in which the defendant moved to exclude the plaintiff's technical expert and for summary judgment. The defendant then identified numerous defects in the plaintiff's opposition brief, including citations to non-existent opinions and mischaracterisations of precedent. Similar fact patterns have led to sanctions elsewhere after counsel acknowledged using ChatGPT or other generative tools without checking the results (AP, Bloomberg).

In explaining its decision, the Kansas court applied the standards of Federal Rule of Civil Procedure 11, holding that counsel must ensure that legal contentions are warranted by existing law or by a nonfrivolous argument for extending, modifying or reversing it, and emphasising that the duty to investigate is personal and cannot be delegated. Other courts have reached the same basic conclusion, stressing that the use of AI is not prohibited per se but that unverified AI output cannot be treated as authoritative law (McGuireWoods analysis, AP).

The court rejected attempts by senior and local lawyers to distance themselves from the defective submissions, reiterating that every attorney who signs a filing bears independent responsibility for its contents. Judges in recent sanctions orders have similarly criticised "blind reliance" on colleagues or on AI, variously characterising such conduct as reckless and as an abdication of professional obligations (AP, AP).

Sanctions in the Kansas matter included fines of varying amounts against individual attorneys, revocation of one pro hac vice admission, an order to report the matter to disciplinary authorities, and requirements that the firms adopt or certify internal policies on AI supervision and citation verification. Elsewhere in 2025, tribunals including state appellate courts have imposed fines for comparable misconduct ranging from several thousand dollars to $10,000, and in some instances have required that their opinions be served on clients and bar authorities (Bloomberg, McGuireWoods).

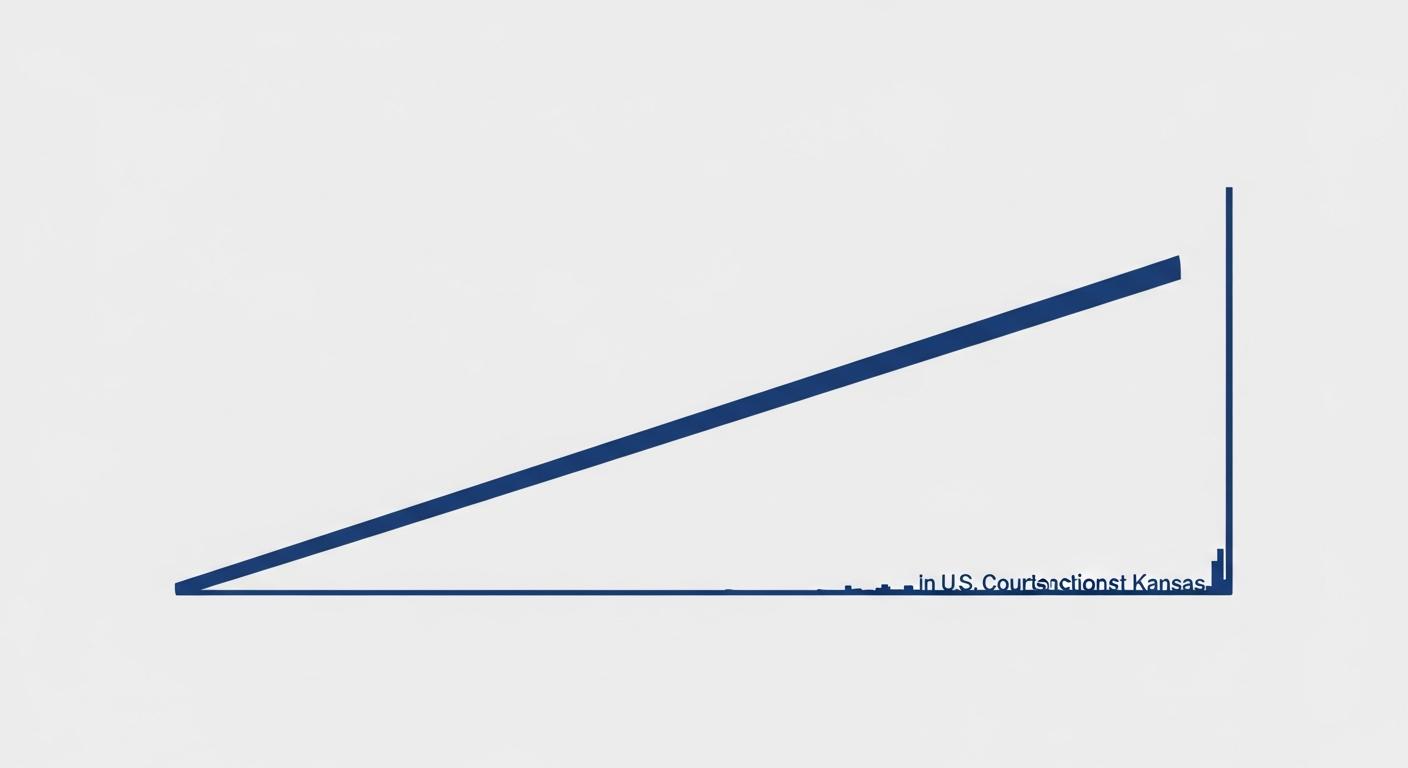

The rulings form part of a growing body of authority addressing the intersection of legal ethics and the rapid adoption of generative AI tools. Courts on both sides of the Atlantic have warned that failure to verify AI-produced legal material risks eroding public confidence in the justice system and may, in egregious cases, trigger disciplinary referrals or contempt proceedings (AP, AP (UK)).

For practitioners, the recent decisions underline that reliance on automated drafting or research tools demands robust verification, adequate supervision and firm-level policies to prevent the submission of fabricated authorities. Several courts have required firms to implement training and written procedures on responsible AI use as part of the remedial measures in sanction orders (McGuireWoods, Bloomberg).

Source Reference Map

Inspired by headline at: [1]

Sources by paragraph:

- Paragraph 1: [2], [5]

- Paragraph 2: [3], [5]

- Paragraph 3: [4], [2]

- Paragraph 4: [2], [3]

- Paragraph 5: [5], [4]

- Paragraph 6: [6], [2]

- Paragraph 7: [4], [5]

Source: Noah Wire Services