The UK government has launched a coordinated push to build a formal testing regime for deepfake detection, seeking to set international benchmarks for tools that identify AI‑generated images, video and audio. According to the government, the aim is a “world‑first deepfake detection evaluation framework” that will create common performance standards and expose weaknesses in technologies used by industry and law enforcement. (Sources: government briefing; Home Office case study)

Officials say the programme will pool expertise from major technology firms, academic research groups and specialist units inside government, mirroring testbeds already established for evaluating biometric checks such as liveness detection and age estimation. The plan includes a technical architecture designed to allow regular, consistent assessment of different detection approaches against realistic threat scenarios. (Sources: government briefing; ACE blog)

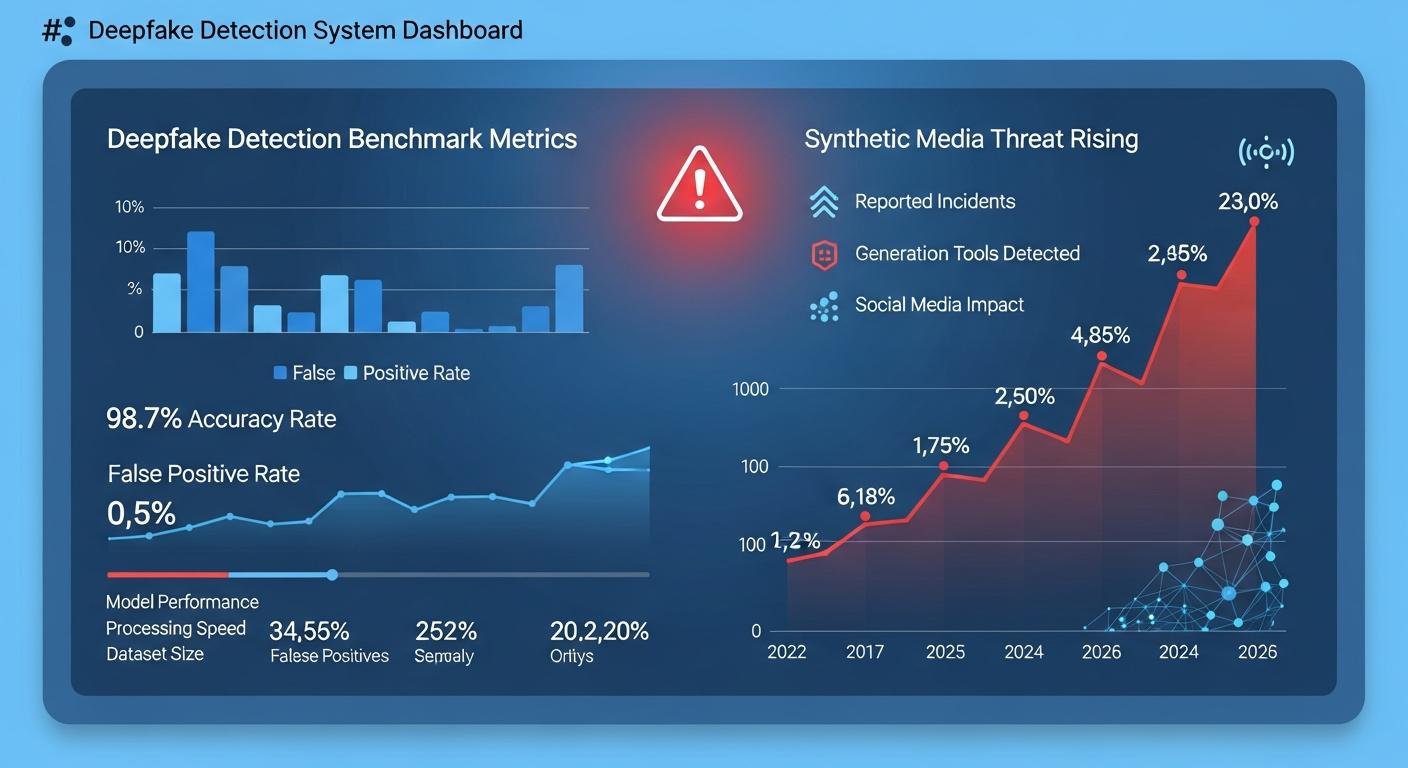

The scale and speed of the problem underpin the urgency. Government material and Home Office analysis project a dramatic rise in deepfake circulation, estimating some eight million items shared in 2025 compared with roughly 500,000 in 2023. Officials say this roughly sixteen‑fold surge reflects cheaper, more accessible generative AI tools. Industry and police advisers have warned that, because these tools are affordable and easy to use, convincing synthetic media can now be produced without specialist skills. (Sources: Home Office case study; government briefing)

Ministers have framed the drive primarily as a measure to protect people vulnerable to exploitation. Jess Phillips, Minister for Safeguarding and Violence Against Women and Girls, said: “A grandmother deceived by a fake video of her grandchild. A young woman whose image is manipulated without consent.” The government has moved to criminalise creating or requesting non‑consensual intimate deepfakes and is preparing further measures to ban tools that produce so‑called “nudification” outputs. (Sources: government briefing)

The initiative is being tested through an operational challenge designed to accelerate collaboration and surface practical lessons. The Deepfake Detection Challenge brings together public sector bodies, security services, academia and private companies in time‑pressured exercises. These exercises introduce bespoke datasets and live scenarios to test detection, resilience and response. Independent advisory groups of generative AI researchers have been contributing technical insight to the effort. (Sources: ACE blog; Generative AI Academic Advisory Group report)

Wider concerns about synthetic media extend beyond intimate‑image abuse. Universities, immigration authorities and employers that use automated screening and interview systems face a growing risk of deception, with early studies finding isolated but real instances of manipulated content in candidate assessments. Security services and policing units caution that impersonation and fraud are increasingly sophisticated and that institutions relying on automated checks must build layered verification and live‑interview fallbacks. (Sources: The Guardian; Generative AI Academic Advisory Group report)

Government officials say the evaluation framework will serve as the basis for regulatory expectations. It will also inform enforcement under the Online Safety Act, under which ministers plan to prioritise non‑consensual deepfake offences. The work is presented as part of broader commitments to reduce violence against women and girls and to ensure safety and privacy are embedded into AI system design. Industry participants, law enforcement and academic partners will be asked to adopt the testing outputs as a benchmark for procurement and product development. (Sources: government briefing; Home Office case study)

As detection capabilities are benchmarked and gaps exposed, officials argue the next phase must be as much about prevention and product design as it is about reactive policing. Government materials stress that consistent, repeatable testing will make it easier to require platforms and vendors to mitigate harms before synthetic content spreads widely. (Sources: Home Office case study; government briefing)

Source Reference Map

Inspired by headline at: [1]

Sources by paragraph:

- Paragraph 1: [2], [6]

- Paragraph 2: [2], [3]

- Paragraph 3: [6], [2]

- Paragraph 4: [2]

- Paragraph 5: [3], [5]

- Paragraph 6: [4], [5]

- Paragraph 7: [2], [6]

- Paragraph 8: [6], [2]

Source: Noah Wire Services