New York is pressing ahead with a broad package of measures to shape how artificial intelligence is used across the state, proposing a new oversight apparatus and a series of criminal and civil controls aimed at deceptive or harmful AI applications. According to the governor’s office, the initiatives are designed to protect consumers, workers and the integrity of elections while advancing research and development in technologies that serve the public interest. (Sources: Governor’s press releases on proposed protections and the RAISE Act.)

Central to the plan is a proposed Office of Digital Innovation, Governance, Integrity and Trust, which would coordinate digital safety and technology governance across state agencies. The RAISE Act, which New York recently enacted, already establishes an oversight office within the Department of Financial Services to review large frontier models, and it requires developers to disclose their safety frameworks and report safety incidents. The new office would build on that oversight architecture and seek to align enforcement of the state’s growing body of AI rules. (Sources: governor.ny.gov coverage of proposed AI protections and the RAISE Act.)

The administration is seeking specific new criminal and disclosure tools to limit manipulative AI outputs. Proposed measures include adding misdemeanour liability for unauthorised use of a person’s voice in advertising, creating a private right of action for digitally altered false images, and requiring clear provenance and disclosure for digitised political communications published within 60 days of an election. The state has also taken executive action to block particular foreign-linked AI applications from state devices and networks, citing cybersecurity and national-security concerns. (Sources: governor.ny.gov announcements on deceptive AI legislation and the DeepSeek ban.)

Alongside rules for synthetic media, New York is pursuing consumer-privacy reforms and market-transparency mandates. The administration has proposed a data-broker registration regime with a centralised deletion-request mechanism and continues to expand laws that require disclosure when advertising uses a “synthetic performer” or when prices are set algorithmically. These steps follow prior legislation enacted to increase transparency and to shield vulnerable populations from AI-enabled harms. (Sources: governor.ny.gov materials on proposed protections, the RAISE Act and FY26 budget legislation.)

The package pairs regulatory action with investment in capacity building. The state is piloting an AI training programme for 1,000 state employees that combines classroom instruction with a generative-AI tool, and it has expanded the Empire AI Consortium with capital funding to boost computing power and add academic and medical research partners. Officials describe the combined approach as aiming both to manage risks and to cultivate AI research focused on public-good applications. (Sources: governor.ny.gov announcements on the AI training pilot and Empire AI expansion.)

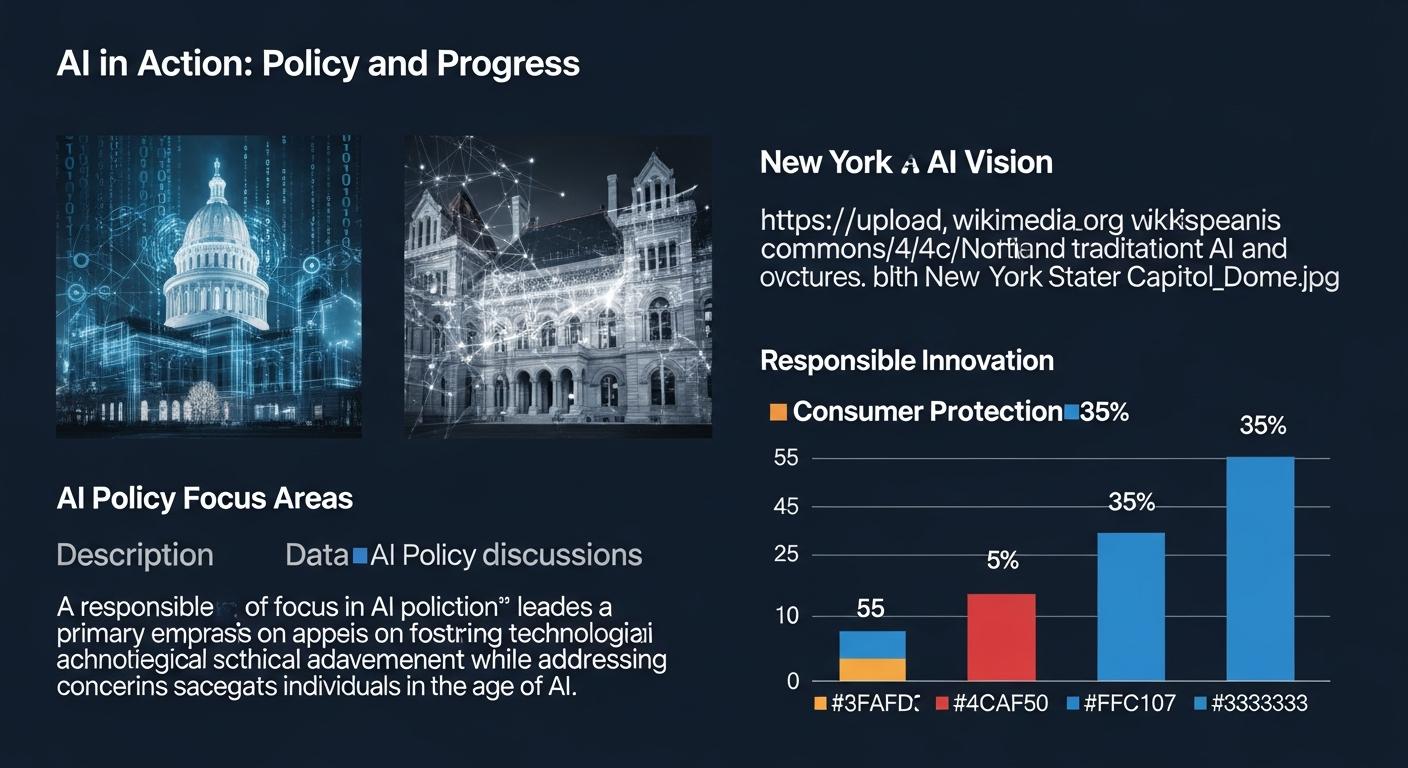

Taken together, the initiatives underline New York’s ambition to set national benchmarks for AI governance by coupling enforcement, criminal penalties and transparency with public investment in research and workforce readiness. Supporters say the measures create guardrails while promoting responsible innovation; industry advocates have argued that overlapping state rules may complicate compliance. The state’s recent statutes and proposals place it among the U.S. jurisdictions taking a more assertive regulatory posture toward advanced AI. (Sources: governor.ny.gov reporting on the RAISE Act and proposed protections.)

Source Reference Map

Inspired by headline at: [1]

Sources by paragraph:

- Paragraph 1: [2], [4]

- Paragraph 2: [4], [6]

- Paragraph 3: [2], [3]

- Paragraph 4: [2], [4]

- Paragraph 5: [5], [6]

- Paragraph 6: [4], [2]

Source: Noah Wire Services