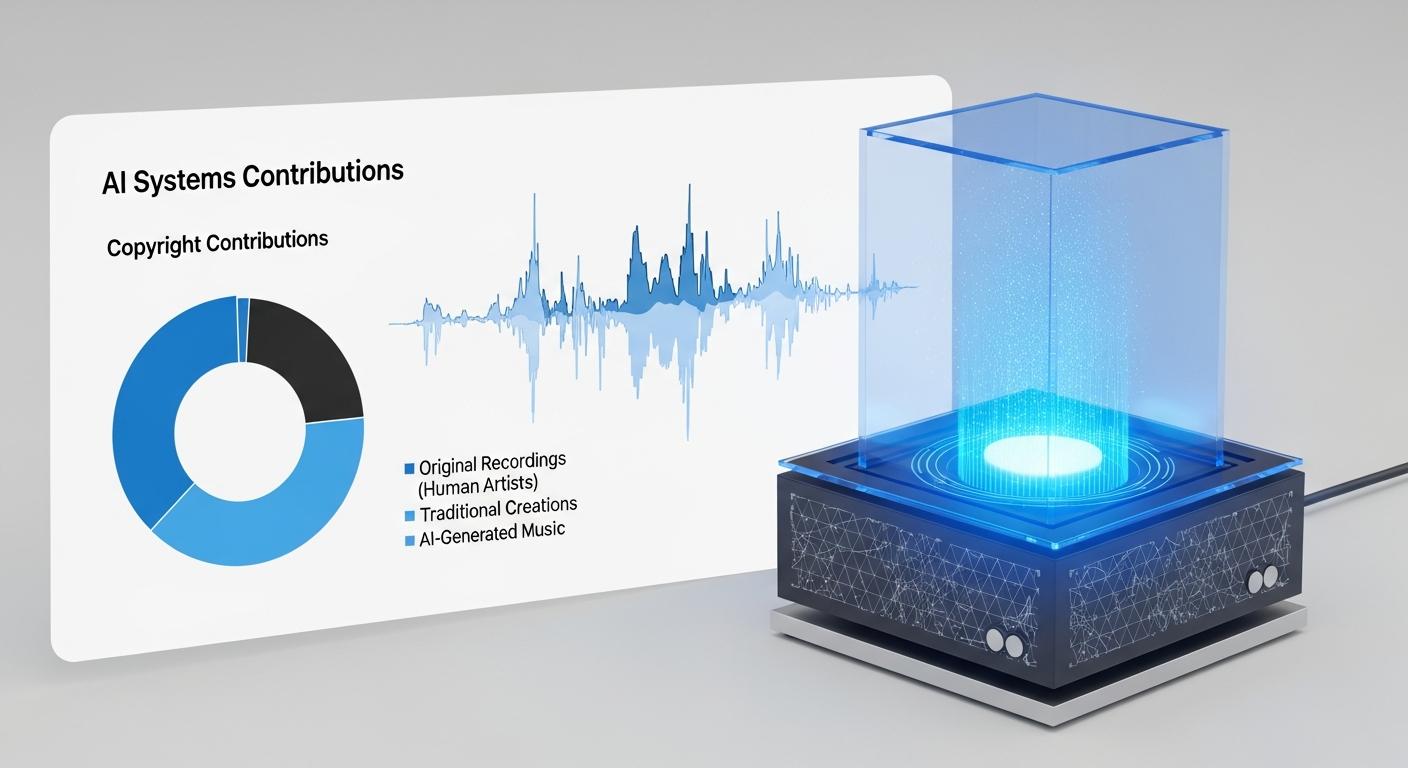

Sony Group has built an experimental system that it says can identify which copyrighted recordings an AI‑generated song draws on and estimate how much each original work contributed. According to Sony AI, the technique can detect multiple source recordings within a single output and produce percentage estimates that rights holders could use to assess unauthorised use and pursue royalties. [2],[1]

The technology is described as operating in two distinct modes. When developers of a generative model cooperate, Sony AI says it can inspect training data and attribute particular model behaviours to individual tracks or artists; when developers decline access, the system instead compares generated audio against commercial catalogues to infer likely sources and their relative influence. An academic paper on the approach has been accepted for presentation at an international conference, indicating that the method has been peer reviewed. [2]

Sony’s effort arrives amid mounting tension between record companies and AI firms over training data. Sony Music has warned hundreds of technology companies against using its recordings without permission, and the major has moved to protect its interests through both legal action and investments in third‑party rights technology. Industry observers note that the company has begun to pair technical detection tools with commercial initiatives aimed at licensing and monitoring. [7],[3],[1]

The broader market shows mixed responses: several major labels are suing generative AI services that they say trained models on copyrighted material without licences, while others have negotiated settlements and commercial partnerships with AI platforms. Universal Music’s settlement and tie‑up with an AI generator, which included licensing terms, illustrate one path labels are taking to convert disputes into revenue opportunities. Those deals, however, have sometimes provoked user backlash when product features were restricted as part of the agreements. [1],[6]

Streaming platforms are also reacting. Deezer, for example, has begun labelling tracks created with AI and says a rising share of daily uploads is fully AI‑generated, prompting the company to flag suspected fraudulent plays and exclude them from royalty pools. Industry data cited by platforms point to sharp growth in AI‑originated submissions that services must detect and manage. [4],[5]

If adopted widely, detection tools like Sony’s could underpin new licensing frameworks that channel revenue back to songwriters, performers and producers. Their impact will depend on technical accuracy, the willingness of AI developers to grant access, and whether independent verification mechanisms are accepted by courts, platforms and rights owners. Sony has not announced a commercial roll‑out date for the system, and industry observers caution that technical capability alone will not resolve legal and commercial disputes over training data. [2],[3],[1]

Source Reference Map

Inspired by headline at: [1]

Sources by paragraph:

- Paragraph 1: [2], [1]

- Paragraph 2: [2]

- Paragraph 3: [7], [3], [1]

- Paragraph 4: [1], [6]

- Paragraph 5: [4], [5]

- Paragraph 6: [2], [3], [1]

Source: Noah Wire Services