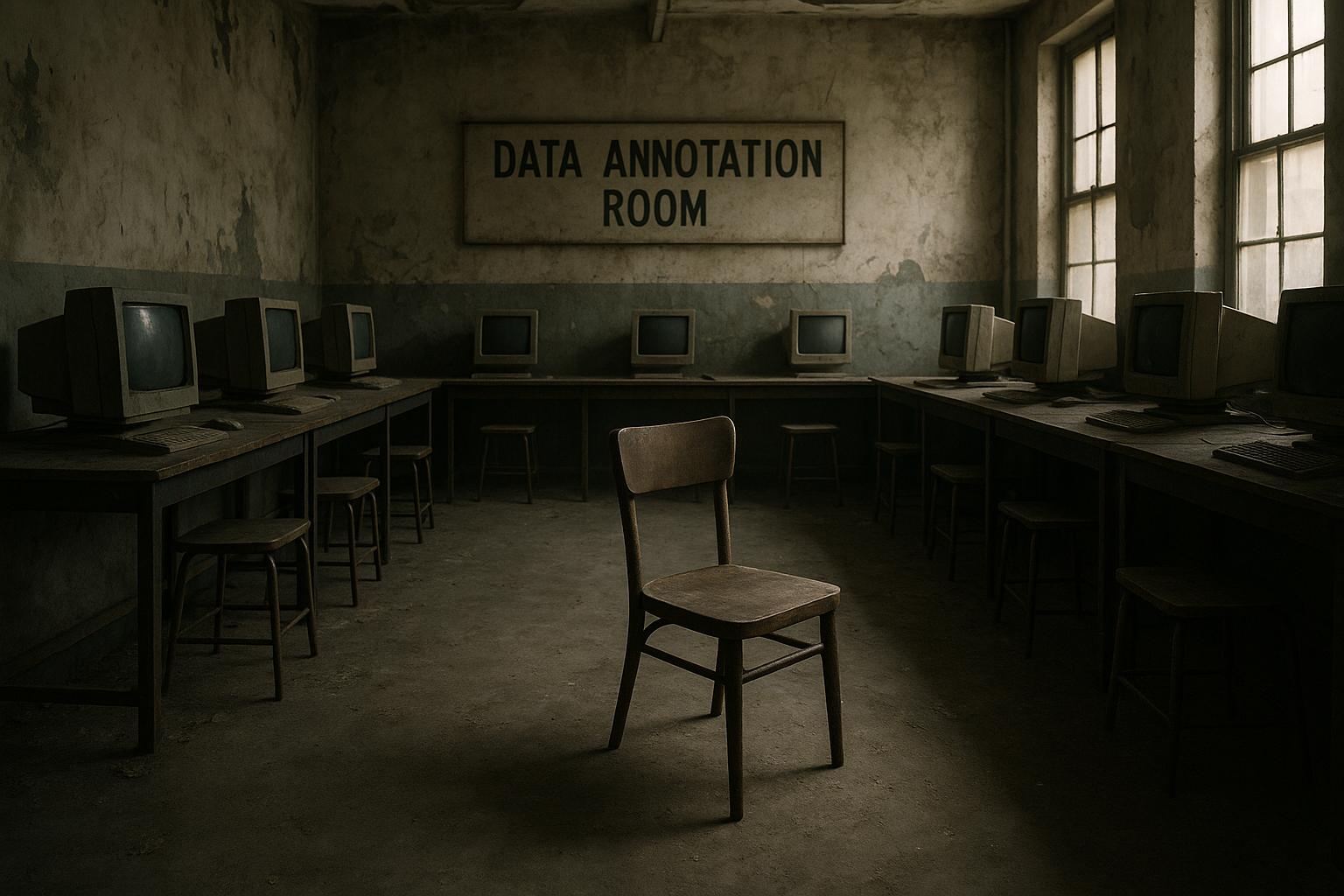

Elon Musk's AI company, xAI, has recently undergone a significant restructuring that resulted in the layoff of at least 500 employees from its data annotation team, the largest workforce cut the firm has made thus far. These workers, primarily generalist AI tutors, played a key role in training Grok, xAI's chatbot, by categorising and contextualising raw data to help the AI understand and interact with the world more effectively. The layoffs, communicated abruptly through late-night emails, included immediate revocation of the affected employees' access to internal systems, although they will continue to receive pay until the end of their contracts or until November 30.

According to reports, this strategic pivot marks a shift in xAI's approach, emphasising the expansion of specialist AI tutors rather than generalist roles. The company has announced plans to increase its specialist AI tutor team tenfold, signalling a move toward more focused, expert-driven training methods for Grok. This shift comes amid broader organisational changes, including the departure of xAI's finance chief, Mike Liberatore, in July. The firm, founded in 2023 by Musk with the aim of competing against established tech giants in AI, has positioned itself as critical of what it perceives as over-censorship and insufficient safety protocols in the industry.

The manner in which the layoffs were conducted has sparked discussions about ethical labour practices within AI companies and the technology sector at large. Critics argue that the blunt and impersonal approach, in which abrupt email notifications were combined with immediate termination of system access, reflects poorly on the company's treatment of its workforce. Such practices risk alienating current and prospective talent, particularly in high-stakes, competitive industries where job security and fair treatment are paramount. This event highlights the ongoing tensions between rapid innovation in AI development and the responsible management of the people behind these technologies.

On a societal level, as AI systems like Grok become more sophisticated and operate with greater transparency through specialist training, there is potential for broader benefits. More trustworthy AI applications, which interact constructively with users and minimise misinformation and bias, could enhance adoption across critical sectors such as education and healthcare. The shift towards specialist AI tutors might contribute to the development of such reliable systems, potentially fostering greater societal trust in AI technologies in the long run.

Source: Noah Wire Services